Featured Use Case: Why a Large Government Entity Replaced Its SIEM with MixMode

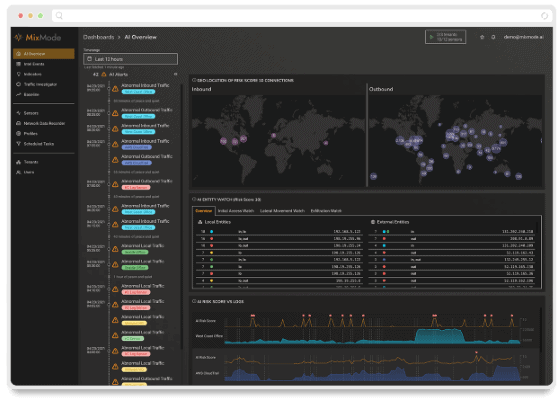

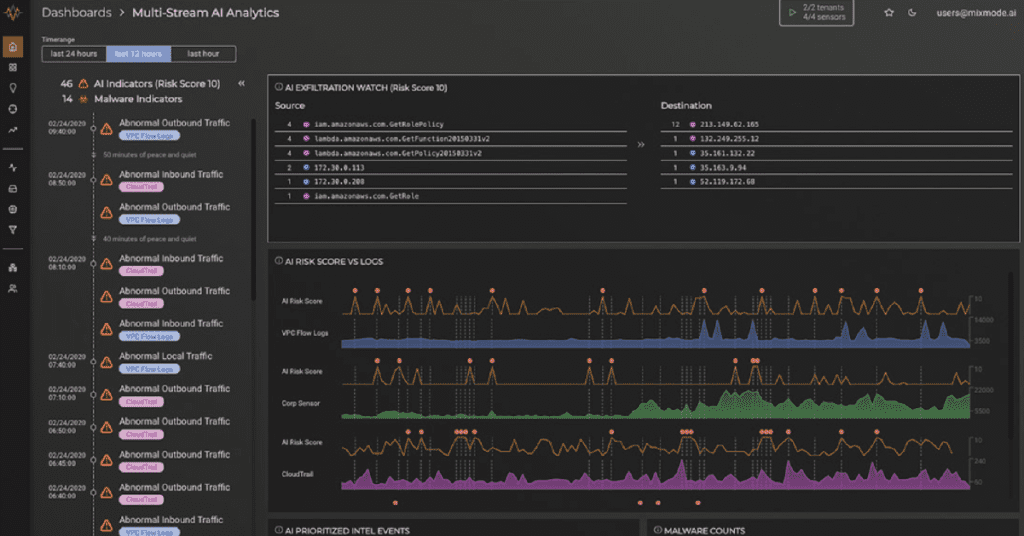

Despite a three-year security information and event management (SIEM) deployment and a two-year user behavior analytics (UBA) deployment, the entity's personnel still needed an alternative that could better detect and manage threats in real time, along with a better platform for gathering comprehensive data.