How Do We Protect Autonomous Vehicles?

That’s the question on everyone’s mind as we hurtle towards a future where self-driving cars are very much a reality.

We’re just a few years away from seeing autonomous vehicles hit the road, and when they do, the world of cybercrime will have a whole new sector to worry about.

These vehicles will need mechanisms in place to prevent a hacker from taking over the system and causing a disaster.

In an article titled “Cloud Breaches Like Capital One Will Strike At Self-Driving Cars,” published in Forbes this week, author Lance Eliot said, “The AI system driving the car generally needs live data at the time the data is collected and doesn’t need prior data quite as much. That being said, the prior or previously collected data can be a treasure trove for doing Machine Learning and Deep Learning, allowing the AI system to improve its driving capabilities over time by analyzing and ‘learning’ from the amassed data.”

We will need to be prepared to stop hackers as they attempt to breach the network, something the industry is disappointingly far from being able to do.

Right now, according to the Ponemon Institute, the mean time to identify an incident is 197 days and the mean time to respond to it is 69 days. That is absolutely unacceptable.

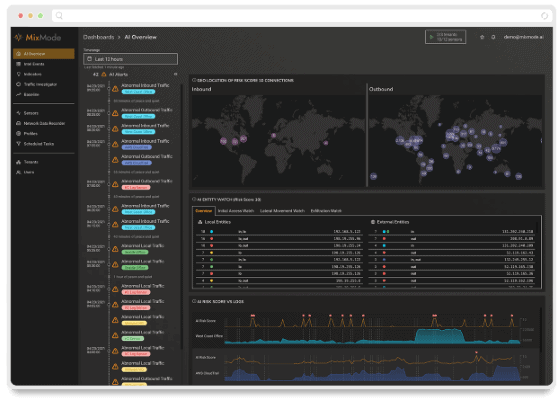

Luckily, emerging platforms that offer much faster response times are arriving on the scene, such as MixMode, which boasts an impressive five-minute window between a breach and its detection.

Using third-wave AI, MixMode’s proactive network security monitoring platform provides unparalleled visibility and best-in-class forensics, enabling real-time identification and remediation of the threats that truly matter.

There have been no reports to date of an attempt to hack a self-driving vehicle, but that in itself is a problem. The same issue was present when the first e-commerce platforms were developed in the 1990s. No one attempted to hack them initially; then a big round of hacks forced Microsoft to make its operating system one of the most secure available today.

The same may happen with automobiles, but this time there is much more at stake. Before anyone gets into a self-driving car, they should consider the security of the vehicle’s network just as much as they would the physical security of the vehicle.

AI is Outpacing Human Ability

The battle royale of human security teams versus AI-developed attacks rages on as companies struggle to protect themselves from the ever-advancing threat of technologically sophisticated hackers. The problem is twofold: there aren’t enough human workers available in the field, and the amount of data they have to sift through is impossibly large. At the same time, most AI isn’t advanced enough to do the job as accurately as a human being could.

According to an article published this week by Help Net Security, AI was shown outpacing human capabilities at Black Hat 2018, where IBM demonstrated the potential impact of a deep learning-based ransomware, DeepLocker. The malware used a face recognition algorithm to autonomously select which computer to attack with its encrypted ransomware.

This demonstrates an AI-driven attack that only an extremely advanced AI system could detect and prevent from wreaking havoc. As hackers begin to arm themselves with artificial intelligence, we too have to adopt it in our defenses or get left behind.

How AI Can Solve Staff Burnout

Cybersecurity professionals are overworked and overwhelmed, and they could use some help as their workload only grows with each passing year.

However, according to an article published by Security Intelligence, there is hope that AI can solve many of these problems if applied properly.

“With the cyber threat landscape being what it is, really all we have here is a simple case of piling on. You see, if you use cybersecurity AI like a surgical tool, you begin to lighten the burden on your staff. AI can do things like munch away at mountains of data at incredibly high speeds. Therefore, at least in theory, the result of cybersecurity AI doing the heavy lifting should be: Staff becoming more efficient in their productivity, since they no longer feel overwhelmed; and staff having increased ability to keep up with new threats and technologies,” wrote George Platsis for Security Intelligence.

Artificial intelligence like that developed by cybersecurity company MixMode can reduce false positives for security teams and can detect a breach within five minutes. Handing off just these two tasks eliminates most of a security professional’s daily work, so they can focus on stopping hacks rather than just looking for them.
