Attacking and Defending with Artificial Intelligence

When discussing machine learning and AI in cybersecurity, we often focus on their potential defensive capabilities. However, it’s time we paid attention to the other side as well, as hackers begin to arm themselves with similar technologies.

Industry practitioners believe we will see an AI-powered cyberattack within a year: 62% of surveyed Black Hat conference participants are convinced it will happen. When it does, we have to be prepared or suffer the consequences.

In an article for Info Security, Leron Zinatullin describes various uses of AI in cybercrime. The first is resource efficiency. As mentioned previously on this blog, there are too few cybersecurity professionals for the number of open jobs on the market, a void AI could help fill.

The ability to analyze large data sets lets attackers prioritize victims based on their wealth or online behavior. Models can even identify which victims are most likely to pay a ransom.

“Imagine all the data available in the public domain, as well as previously leaked secrets, through various data breaches, are now combined for the ultimate victim profiling in a matter of seconds with no human effort,” said Zinatullin.

Once a victim is selected, AI can use that person’s data to tailor the emails and websites they are most likely to click on.

This will inevitably increase the scale and frequency of highly targeted spear-phishing attacks.

Such attacks will only grow more sophisticated as rapid developments in speech synthesis allow AI to mimic the human voice.

To protect against these highly intelligent attacks, an enterprise needs to be armed with the appropriate defensive technology. Products that tackle these issues are already emerging on the market and will continue to defend against advanced attackers.

Dell and Microsoft Pour Millions into AI Startups

Dell and Microsoft are funding AI start-ups to help them grow and to plug them into the two companies’ product development, sales and distribution networks.

Venture capitalists are backing AI cybersecurity start-ups whose products help companies improve efficiency and better manage and train their workforces.

As unions, corporations and governments debate what effect artificial intelligence, machine learning and automation will have on the future of the workforce, venture capital investors are identifying the most interesting start-up investments that may help steer this historic paradigm shift.

Both Dell and Microsoft aim to advance human progress and focus on start-ups they can mentor with their own technical expertise and market know-how.

Lori Ioannou wrote about the investments in an article for CNBC.

“Our goal is to get a window on innovation,” says Scott Darling, president of Dell Technologies Capital, who notes that Michael Dell reviews every single deal the fund invests in. “We need to plug into the external entrepreneurial ecosystem. This is so important, since the pace of technology is stunning.”

As Darling explains, the ROI on successful start-ups is so large that investment in these ventures has boomed, because the market for these technology products is typically huge. Dell Technologies alone has made about 100 investments, totaling more than $600 million, over the last six years.

Current Capabilities of AI in Cybersecurity

Cybersecurity work is repetitive and tedious because identifying cyberthreats requires scanning large volumes of data for anomalous data points. Companies can use the data collected by their existing rules-based network security software, such as a SIEM, to train AI algorithms to identify new cyberthreats.
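To make the idea concrete, here is a minimal Python sketch, assuming each event has already been reduced to a few numeric features (the feature names and values below are hypothetical) and that a label of 1 means one of the existing SIEM rules fired on that event. It fits a plain logistic-regression model to those rule-derived labels, so new events receive a graded risk score instead of a yes/no rule match. This is one simple technique among many, not how any particular product works, and a model trained only on rule labels will largely learn to imitate the rules, so analyst-confirmed labels would likely be needed in practice.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical, pre-scaled features per event:
# [failed_logins / 10, gigabytes_uploaded, off_hours (0 or 1)]
Event = Sequence[float]

# Hypothetical training data: label 1 means an existing SIEM rule fired on the event.
TRAINING: List[Tuple[Event, int]] = [
    ([0.0, 0.1, 0], 0),
    ([0.1, 0.2, 0], 0),
    ([0.0, 0.0, 1], 0),
    ([0.2, 0.1, 0], 0),
    ([0.9, 0.8, 1], 1),
    ([0.8, 0.1, 1], 1),
    ([0.7, 0.9, 0], 1),
    ([1.0, 0.5, 1], 1),
]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(data: List[Tuple[Event, int]], lr: float = 0.1, epochs: int = 500) -> List[float]:
    """Fit logistic-regression weights with stochastic gradient descent; the last weight is the bias."""
    n_features = len(data[0][0])
    w = [0.0] * (n_features + 1)
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + w[-1])
            err = p - y  # derivative of the log-loss with respect to the linear score
            for i in range(n_features):
                w[i] -= lr * err * x[i]
            w[-1] -= lr * err
    return w


def risk_score(w: List[float], x: Event) -> float:
    """Score in (0, 1): how strongly the event resembles past rule hits."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + w[-1])


if __name__ == "__main__":
    weights = train_logistic(TRAINING)
    print(round(risk_score(weights, [0.6, 0.7, 1]), 3))  # unusual event
    print(round(risk_score(weights, [0.05, 0.1, 0]), 3))  # routine event
```

The appeal of a scored model over fixed rules is that an event matching no single rule exactly can still rank high if it resembles past rule hits.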

Understanding an attack and responding to it in time requires more than the extra logs a SIEM can provide, and a human security team takes too long to work through all that data.

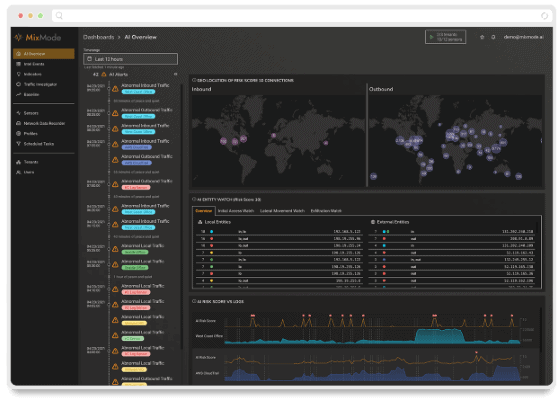

This is the problem emerging cybersecurity companies like MixMode are trying to solve, by building AI that can distinguish false positives from real threats.

AI algorithms can be trained to take certain predefined steps in the event of an attack and over time can learn what the best response is. Human security experts cannot match the accuracy, speed and scale at which AI software can accomplish these data analysis tasks.
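One way to read “learn what the best response is” is as a multi-armed bandit problem. The sketch below is a hypothetical illustration, not a description of any vendor’s system: it assumes a fixed menu of predefined response actions (the action names are made up) and a reward signal, such as an analyst rating of 1 when a response contained the incident and 0 otherwise. An epsilon-greedy policy usually picks the action with the best average reward so far, but occasionally tries another one so it keeps learning.

```python
import random
from typing import Dict, List


class ResponseSelector:
    """Epsilon-greedy choice among predefined incident responses."""

    def __init__(self, actions: List[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {a: 0 for a in self.actions}
        self.values: Dict[str, float] = {a: 0.0 for a in self.actions}  # mean reward so far

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore: try a random response
        return max(self.actions, key=lambda a: self.values[a])  # exploit: best so far

    def update(self, action: str, reward: float) -> None:
        """Record an outcome (e.g. an analyst's 0/1 rating) as an incremental mean."""
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


if __name__ == "__main__":
    # Made-up success rates that simulate analyst feedback for each action.
    success_rate = {"block_ip": 0.6, "reset_credentials": 0.4, "isolate_host": 0.9}
    selector = ResponseSelector(list(success_rate))
    env = random.Random(42)
    for _ in range(2000):
        action = selector.choose()
        selector.update(action, 1.0 if env.random() < success_rate[action] else 0.0)
    print(max(selector.values, key=selector.values.get))
```

The `epsilon` parameter trades off exploration against exploitation: a larger value finds a better response faster but spends more incidents on weaker ones.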

Large-scale data analysis and anomaly detection are among the areas where AI might add value to cybersecurity today, according to an article by Raghav Bharadwaj for Emerj that explains why an AI cybersecurity system is paramount in the changing digital landscape. The applications it covers include:

- AI for Network Threat Identification

- AI Email Monitoring

- AI-based Antivirus Software

- AI-based User Behavior Modeling

- AI for Fighting AI Threats

Bharadwaj concludes: “The one challenge for companies using purely AI-based cybersecurity detection methods is to reduce the number of false-positive detections. This might potentially get easier to do as the software learns what has been tagged as false positive reports. Once a baseline of behavior has been constructed, the algorithms can flag statistically significant deviations as anomalies and alert security analysts that further investigation is required.”
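The baseline-and-deviation approach Bharadwaj describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the MixMode Platform’s method: it assumes one numeric metric per host (hourly outbound megabytes, a hypothetical choice), builds each host’s baseline as the mean and standard deviation of its past values, and flags values at least three standard deviations away, a common but arbitrary threshold. The hypothetical `mark_false_positive` helper models analyst feedback by folding a dismissed value back into the baseline so similar values stop being flagged.

```python
import statistics
from typing import Dict, List, Tuple

# Hypothetical metric: outbound megabytes per hour, keyed by host name.
History = Dict[str, List[float]]


def build_baseline(history: History) -> Dict[str, Tuple[float, float]]:
    """Return (mean, population standard deviation) of each host's past observations."""
    return {host: (statistics.mean(vals), statistics.pstdev(vals)) for host, vals in history.items()}


def flag_anomalies(history: History, current: Dict[str, float], threshold: float = 3.0) -> List[str]:
    """Flag hosts whose current value is at least `threshold` std devs from their baseline mean."""
    baseline = build_baseline(history)
    flagged = []
    for host, value in current.items():
        if host not in baseline:
            continue  # no baseline yet, so nothing to compare against
        mean, sd = baseline[host]
        z = (value - mean) / max(sd, 1e-9)  # floor avoids division by zero on a flat history
        if abs(z) >= threshold:
            flagged.append(host)
    return flagged


def mark_false_positive(history: History, host: str, value: float) -> None:
    """Analyst feedback: treat the flagged value as normal by adding it to the baseline data."""
    history.setdefault(host, []).append(value)


if __name__ == "__main__":
    history = {"web-01": [10, 12, 11, 9, 13, 10, 12], "db-01": [50, 52, 48, 51, 49, 50, 50]}
    print(flag_anomalies(history, {"web-01": 40.0, "db-01": 51.0}))  # → ['web-01']
    mark_false_positive(history, "web-01", 40.0)
    print(flag_anomalies(history, {"web-01": 40.0}))  # → []
```

Folding dismissed alerts into the history widens the baseline, which cuts repeat false positives but could also mask a real attack that was mislabeled; a production system would likely need richer baselines (for example, per time of day) and more careful feedback handling.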

That’s exactly what we created the MixMode Platform to do. Let our AI sift through the false positives so your team can focus on more important security measures. To book a demo: www.mixmode.ai

By Ana Mezic, Marketing Coordinator at MixMode