What Are AI-Generated Attacks?

AI-generated attacks refer to cyber threats that leverage artificial intelligence and natural language processing to deceive and compromise individuals, organizations, and systems. Threat actors with malicious intent use AI-powered models and tools to generate convincing phishing emails, social engineering messages, and other AI-generated text that bypass traditional security measures. These attacks are becoming increasingly sophisticated, mimicking the language and style of legitimate emails to trick users into disclosing sensitive information or committing fraudulent activities.

AI-generated attacks exploit the capabilities of machine learning and language models to craft personalized phishing emails that are difficult to detect. By analyzing vast amounts of data and human intelligence, AI-enabled tools can generate highly convincing emails that contain minimal grammatical errors and use authentic language. The aim is to deceive individuals and gain access to their personal or sensitive information.

These attacks pose significant challenges for cybersecurity professionals and security teams. The introduction of AI-based tools in cybersecurity has expanded the attack surface, making it more challenging to identify indicators of compromise and thwart attacks. Adversarial attacks, where AI-generated content is used to manipulate or deceive systems, can undermine security standards and put national security at risk.

Types of AI-Generated Attacks

One common type is the use of AI to generate convincing phishing emails. Threat actors leverage AI-powered models and language generation techniques to craft emails that appear legitimate and deceive recipients into revealing sensitive information or performing malicious actions.

Another type of AI-generated attack is manipulating social media and online platforms. AI algorithms can amplify propaganda, spread misinformation, or manipulate user behavior. This presents a significant challenge for businesses and consumers as it can lead to reputational damage, loss of trust, and even influence public opinion.

Additionally, AI-generated attacks can involve the creation of deepfake content. Deepfakes are manipulated audio, video, or images that convincingly mimic real people, often used to spread false information or defame individuals. These attacks can have severe personal and professional implications, including identity theft and reputation damage.

The characteristics and techniques used in AI-generated attacks include the ability to generate highly convincing content, mimicking the language and style of genuine communications. AI algorithms analyze vast amounts of data and human intelligence to create messages with minimal grammatical errors, making them difficult to differentiate from legitimate communications. Moreover, AI algorithms can adapt and learn from previous attacks, continuously improving their techniques and evading detection.

Impact of AI-Generated Attacks

AI-generated attacks have a significant impact on various aspects of society and individuals. These attacks exploit the advanced capabilities of artificial intelligence, particularly in natural language processing, to create sophisticated and convincing cyber threats. With the ability to generate highly realistic and personalized phishing emails, AI algorithms can trick even the most vigilant users. The consequences of successful AI-generated attacks can be severe, ranging from financial loss and identity theft to reputational damage and manipulation of public opinion. These attacks challenge traditional security measures and require security teams to adapt and strengthen their defenses constantly. It is crucial for cybersecurity professionals and businesses to understand the potential threats posed by AI-generated attacks and implement appropriate security tools and measures to mitigate the risks. Additionally, policymakers and national security agencies must adapt their threat models and security standards to address the evolving landscape of AI-generated threats.

Threats to Businesses and Consumers

One significant risk associated with AI-generated attacks is the potential for data security breaches. These attacks can bypass traditional security measures, such as multi-factor authentication, by generating convincing phishing emails that appear legitimate. This increases the likelihood of individuals falling victim to these attacks and divulging sensitive information.

The consequences of AI-generated attacks can be devastating. Businesses may suffer financial losses due to fraudulent activities and identity theft. Additionally, such attacks can damage the reputation of both companies and individuals. AI enables attackers to create sophisticated and personalized phishing emails, making it more difficult for security teams to detect and prevent them.

Potential for Increased Cyber Threats

Artificial intelligence (AI) and natural language processing (NLP) advancements have significantly empowered threat actors, leading to the emergence of sophisticated AI-generated attacks. These attacks substantially increase cyber threats, requiring businesses and consumers to be aware of this evolving threat landscape.

AI-generated attacks leverage language models and AI-based tools to craft compelling and personalized phishing emails. These attacks bypass traditional security measures and exploit human vulnerability by mimicking legitimate emails. This technique increases the success rate of these attacks, making it more difficult for security teams to detect and prevent them.

Challenges for Security Teams

Security teams face numerous challenges in addressing AI-generated attacks due to their sophisticated nature and ability to bypass traditional security measures. These attacks leverage language models and AI-based tools to create convincing and personalized phishing emails that mimic legitimate ones. This makes it difficult for security teams to distinguish between malicious and authentic emails, increasing the risk of successful attacks.

Here are some key challenges security teams face in defending against AI-generated cyberattacks:

- Highly dynamic threats - AI attacks can continuously modify tactics, techniques, and procedures to avoid detection. Hard to build effective static defenses.

- Detection evasion - AI allows attackers to optimize malware, phishing lures, network traffic, and more to hide from rules, signatures, and training data. Hard to spot anomalies.

- Increased scale & speed - AI dramatically boosts the automation and efficiency of attacks. Hard for human analysts to keep up.

- Blind spots in modeling - AI models have gaps in detecting novel attacks not represented in training data. Attackers exploit these blind spots.

- Difficulty attributing attacks - AI makes it harder to attribute attacks to specific groups based on TTPs, which are constantly changing.

- Skill gaps - Defending against AI attacks requires specialized data science and ML skills that security teams often lack. Hard to find this talent.

- Lack of labeled training data - Defensive AI relies on large volumes of representative, labeled data on attacks - which is scarce.

- Data isolation - Defensive insights learned from attacks in one organization aren't shared to benefit other defenses.

- Explainability issues - Inability to explain the logic behind AI model detections hinders investigation, tuning, and collaboration.

Large Language Models Used in Attack Generation

Generative AI tools, such as large language models, can analyze vast amounts of data and learn from it to create authentic-sounding content. They can produce convincing phishing emails, personalized messages, or other forms of communication with malicious intent. These models can even imitate the writing style of specific individuals or organizations, making their attacks more difficult to detect.

However, using language models in AI attacks also introduces significant risks and vulnerabilities. Attackers can leverage these models to bypass traditional security measures and launch highly sophisticated attacks. For instance, AI-generated text may lack the grammatical errors and anomalies typically associated with fraudulent activities, making it harder to identify malicious activity.

Furthermore, by employing AI-enabled tools, threat actors can quickly analyze data and craft targeted attacks at scale. This heightens the potential for identity theft and the compromise of sensitive information. Security teams often struggle to keep up with the constantly evolving techniques employed by malicious actors, as AI-generated attacks are designed to outsmart security tools and standards.
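To make the detection problem concrete, here is a minimal sketch of a static phrase-signature filter and why fluent, reworded text slips past it. The signature list, the sample messages, and the function name `matches_signature` are all illustrative assumptions, not taken from any real product.

```python
import re

# Hypothetical phrase signatures of the kind a static filter might ship with.
SIGNATURES = [
    r"verify your account",
    r"urgent(ly)? action required",
    r"click here to reset your password",
]
COMPILED = [re.compile(pattern, re.IGNORECASE) for pattern in SIGNATURES]


def matches_signature(message: str) -> bool:
    """Return True if any known phrase signature appears in the message."""
    return any(pattern.search(message) for pattern in COMPILED)


# A lure that reuses a well-known phishing phrase.
known_lure = "URGENT action required: click here to reset your password."

# The same request, reworded in fluent, personalized language (made-up text).
reworded_lure = (
    "Hi Dana, following up on yesterday's ticket. Could you sign in via the "
    "link below and confirm your login details before the audit closes?"
)

print(matches_signature(known_lure))     # True: a stored phrase matches
print(matches_signature(reworded_lure))  # False: same intent, no stored phrase
```

The second message asks for the same thing as the first, but because the filter only knows fixed phrases, rewording alone is enough to avoid a match.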

Understanding the Intent of Bad Actors

Bad actors who engage in AI-generated attacks have various motivations and objectives for their malicious actions. By exploiting the capabilities of AI and language models, they can make their attacks more effective and more sophisticated.

One primary objective of bad actors is to gain unauthorized access to sensitive information and systems. By leveraging AI, they can craft convincing phishing emails or other seemingly legitimate forms of communication. This enables them to deceive individuals and trick them into revealing their credentials or unknowingly downloading malware.

Another motivation for bad actors is identity theft. AI-generated attacks allow them to create personalized phishing emails that appear to be from reputable sources. By imitating the writing style and language patterns of specific individuals or organizations, they increase the likelihood of success in obtaining sensitive personal information.

Moreover, bad actors may engage in AI-generated attacks with the goal of financial fraud. By exploiting the capabilities of AI and language models, they can create counterfeit messages or documents that appear authentic. This enables them to manipulate individuals or organizations into financial transactions that benefit the perpetrators.

Techniques Used in AI-Generated Texts

AI-generated texts employ various techniques to create convincing and realistic content for malicious purposes. Natural language processing (NLP) is a commonly used technique that enables AI models to understand and generate human-like text. NLP techniques analyze patterns in language, allowing attackers to craft persuasive messages.

Generative AI tools are another technique used in AI-generated texts. These tools leverage machine learning to generate text that mimics human writing styles. By training on vast amounts of data, generative AI tools can create content that appears authentic and convincing.

To exploit vulnerabilities in language models, attackers employ different strategies. They can input specific prompts or cues to manipulate the generated text. For example, attackers can craft prompts that prime the model to generate content related to online banking or password resets, increasing the likelihood of successful phishing attempts.

Attackers also leverage the imperfections in language models to their advantage. These models can produce occasional grammatical errors or inconsistencies that mimic the imperfections found in genuine communications, making the generated content seem more realistic.

Examples of AI-Generated Attacks

Artificial intelligence (AI) and natural language processing (NLP) have opened up new avenues for threat actors to carry out sophisticated cyber attacks. These attacks are made possible by leveraging AI-generated text to deceive and manipulate unsuspecting victims. One example of such attacks is using AI-powered tools to create convincing phishing emails. Attackers can train language models to generate personalized emails that mimic legitimate messages' writing styles and content. By leveraging AI's ability to create authentic-looking content, these emails can trick individuals into clicking on malicious links or disclosing sensitive information.

Another example is using generative AI tools to carry out fraudulent activities such as identity theft. By training these models on vast amounts of data, attackers can generate fake identities and create convincing online profiles for nefarious purposes. These examples demonstrate the potential threats posed by AI-generated attacks and the need for enhanced security measures to combat them.

Phishing Emails with Malicious Intent

Phishing emails with malicious intent are a common tactic used by threat actors to exploit unsuspecting recipients and gain access to sensitive information. These emails are crafted to deceive individuals and trick them into divulging personal or confidential data such as login credentials, credit card details, or other sensitive information.

Threat actors employ various techniques to create convincing phishing emails. They often mimic legitimate emails from trusted sources like banks, utility companies, or popular online platforms. These emails are designed to appear genuine, complete with official logos and formatting, to deceive recipients into believing they are legitimate.

The impact of falling victim to a phishing email can be significant. Once the threat actor gains access to sensitive information, they can use it for various nefarious purposes, such as identity theft, financial fraud, or unauthorized access to protected systems or accounts.

To protect against phishing attacks, individuals and organizations must adopt proactive measures. This includes educating users about the dangers of phishing emails, implementing multi-factor authentication, regularly updating security software, and using spam filters to identify and block suspicious emails.

In conclusion, phishing emails with malicious intent pose a significant threat to individuals and organizations. To mitigate the risk of falling victim to these exploitative tactics, it is crucial to remain vigilant and exercise caution when dealing with emails, especially those requesting sensitive information.

Multi-Factor Authentication Bypasses

Multi-factor authentication (MFA) is an essential security measure that helps protect against unauthorized access to accounts or systems. It adds an extra layer of security by requiring users to provide more than one type of authentication, typically a combination of something they know (like a password), something they have (like a security token), or something they are (like a fingerprint or other biometric).
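As an illustration of the "something they have" factor, the sketch below implements a time-based one-time password (TOTP) along the lines of RFC 6238, which many authenticator apps and tokens use. The function names and the `verify` helper are assumptions for this example, and a production system should use a vetted library rather than this code.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current time-step counter."""
    return hotp(secret, unix_time // step, digits)


def verify(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, unix_time), submitted)


# Shared secret from the RFC test vectors; a real secret would be random.
SECRET = b"12345678901234567890"
print(totp(SECRET, 59, digits=8))  # 94287082 (RFC 6238 SHA-1 test vector)
```

Note that the server only checks that the code is correct for the current time step. It cannot tell whether the legitimate user typed it into the real site or into a look-alike page that relays it immediately.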

However, with the advancement of artificial intelligence (AI), threat actors have found ways to bypass multi-factor authentication. AI-based tools can mimic legitimate user behavior and generate convincing authentication requests that trick users into providing credentials. These tools can analyze previous authentication requests and create variations that closely resemble legitimate ones, making it difficult for users to differentiate between genuine and malicious requests.

Additionally, generative AI tools can create realistic phishing attempts that target users with personalized content, such as emails that appear to come from a trusted source. These AI-generated attacks can bypass MFA by exploiting human vulnerabilities, such as curiosity or urgency, to deceive users into providing their credentials willingly.

To combat these AI-generated attacks, organizations must stay up to date with the latest AI-based security tools to detect and mitigate such threats. These tools use advanced machine learning models to analyze patterns and identify indicators of compromise or fraudulent activity. Regularly educating users about the risks of phishing attacks and encouraging them to remain vigilant when providing their credentials can also help prevent MFA bypasses.

In conclusion, while multi-factor authentication is a robust security measure, AI-generated attacks pose a significant challenge. Organizations and individuals must adopt advanced security tools and remain cautious to avoid falling victim to these sophisticated attacks.

Convincing Fraudulent Emails

Convincing fraudulent emails are designed to deceive recipients into believing they are legitimate, thus increasing the likelihood of users falling victim to phishing attacks. These emails employ various characteristics and techniques to appear authentic and trustworthy.

One technique commonly used is personalization. Machine learning algorithms analyze vast amounts of data to create personalized messages that mimic a trusted source's style and language patterns. These AI-generated phishing emails can appear more credible and convincing by tailoring the content to the recipient's preferences or recent activities.

Attack Methods Used by AI-Generated Attacks

AI-generated attacks employ various methods to exploit vulnerabilities, deceive systems, and cause damage or compromise trust. Two common attack methods used in these attacks are input attacks and poisoning attacks.

Input attacks involve manipulating the input data that is fed into AI systems. The goal is to deceive the system by introducing malicious inputs that result in unintended or harmful actions. For example, an AI-generated attack could cause a self-driving car to ignore stop signs or take incorrect steps by feeding it manipulated sensor data.
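A toy sketch can show the mechanics of an input attack. It assumes a hypothetical linear "stop sign" detector with made-up weights and four made-up input features; real perception models are far more complex, but the idea of nudging every input slightly in the direction that lowers the model's score is the same.

```python
def score(weights, bias, features):
    """Linear model score: positive means 'stop sign'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def predict(weights, bias, features):
    return "stop sign" if score(weights, bias, features) > 0 else "no stop sign"


def sign(value):
    return (value > 0) - (value < 0)


def perturb(weights, features, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical model parameters and sensor features (all values are made up).
WEIGHTS = [0.9, -0.4, 0.7, 0.2]
BIAS = -0.5
clean_input = [0.8, 0.3, 0.6, 0.5]

adversarial_input = perturb(WEIGHTS, clean_input, epsilon=0.3)

print(predict(WEIGHTS, BIAS, clean_input))        # stop sign
print(predict(WEIGHTS, BIAS, adversarial_input))  # no stop sign
```

Each feature moves by no more than 0.3, yet the combined effect across all features is enough to flip the decision.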

Poisoning attacks, on the other hand, involve maliciously manipulating the training data used by AI models. By injecting false or misleading information, attackers can bias the model towards making wrong predictions or behaving unexpectedly. For instance, an AI-generated attack might manipulate the training dataset of a content filter to allow inappropriate content to bypass detection.
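The content-filter example can be sketched with a deliberately simple word-count filter. The training samples, the labels, and the `train` and `classify` helpers are assumptions made for illustration; the point is only that a handful of mislabeled samples can change what the trained filter lets through.

```python
from collections import Counter


def train(samples):
    """Count how often each word appears under each label."""
    counts = {"block": Counter(), "allow": Counter()}
    for text, label in samples:
        counts[label].update(text.lower().split())
    return counts


def classify(counts, text):
    """Block when the words were seen more often in blocked than allowed samples."""
    words = text.lower().split()
    block_votes = sum(counts["block"][w] for w in words)
    allow_votes = sum(counts["allow"][w] for w in words)
    return "block" if block_votes > allow_votes else "allow"


# Hypothetical clean training data for the filter.
clean_data = [
    ("cheap pills online", "block"),
    ("buy cheap pills now", "block"),
    ("team meeting at noon", "allow"),
    ("lunch order for the team", "allow"),
]

# Poison: the attacker slips in mislabeled copies of the content they want allowed.
poison = [("cheap pills", "allow")] * 3

target = "cheap pills today"
print(classify(train(clean_data), target))           # block
print(classify(train(clean_data + poison), target))  # allow
```

Nothing about the filter's code changed between the two runs. Only the training data did, which is why the integrity of training pipelines matters as much as the model itself.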

These attack methods can cause significant damage and have far-reaching consequences. A successful input attack on an autonomous vehicle can jeopardize the safety of passengers and pedestrians, leading to accidents and injury. Similarly, a poisoning attack on a content filter can result in disseminating harmful or inappropriate content that goes undetected, potentially harming users or degrading online spaces.

Furthermore, AI-generated attacks can erode trust in AI systems and undermine the integrity of those systems. By exploiting their vulnerabilities, attackers can cast doubt on the reliability, safety, and effectiveness of AI-enabled tools and techniques, leading to a loss of confidence among users and cybersecurity professionals.

These examples highlight the potential threats and risks associated with AI-generated attacks and emphasize the importance of implementing robust security measures and continuously monitoring and updating AI models to defend against such attacks.

Why Traditional Solutions Are Inefficient

There are a few key reasons why traditional detection and signature-based security solutions struggle to detect AI-generated cyber attacks:

- Focus on known patterns - Signatures, heuristics, and supervised ML models rely on identifying attacks that match pre-defined rules, patterns, or labeled training data. AI can automatically generate novel attacks that don't match these static indicators.

- Susceptibility to evasion - Attackers leverage AI to iteratively morph malware, phishing content, and network traffic just enough to avoid triggering static thresholds and rules while still achieving goals. Defenses are evaded.

- Lack of generalization - Models that memorize finite samples or rules don't generalize well to detect new types of attacks. AI generates virtually unlimited fresh attacks.

- Data isolation - Models are trained only on limited samples of threat data from a single organization. AI attackers continuously evolve based on successes across industries.

- Blind trust in predictions - Models provide alerts without context or explanation of how predictions are made. Analysts tend to trust this opaque output, while AI attackers find blind spots.

- Inability to adapt quickly - Updating traditional systems requires frequent manual effort and recoding to incorporate new threats. AI attackers innovate automatically.

- Focus on prevention, not deception - Rules and models look for harmful payloads and actions. AI allows attackers to disguise intent with dynamically generated benign decoys.

In summary, AI-empowered attackers can run circles around traditional defenses due to advantages in scale, automation, and adaptation. Matching AI's capabilities on offense requires similar advances on defense.

In addition to personalization, correct grammar and spelling and contextually relevant information make fraudulent emails seem legitimate. Malicious actors leverage machine learning algorithms to improve the overall quality of the email, reducing grammatical errors and ensuring that the message aligns with the context of previous communication or recent events.

By incorporating these elements into their attacks, threat actors can create convincing fraudulent emails that are increasingly difficult for recipients to spot as malicious. To defend against these sophisticated techniques, individuals and organizations need to maintain a high level of skepticism when receiving unsolicited emails, double-check the sender's identity, and use security tools that can identify and block these fraudulent messages.
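One way to automate the "double-check the sender's identity" step is to compare the brand named in the display name with the domain of the actual address. The sketch below assumes a hypothetical `TRUSTED_DOMAINS` mapping and example addresses; it is a single heuristic, not a complete defense, and it would sit alongside checks such as SPF, DKIM, and DMARC in practice.

```python
from email.utils import parseaddr

# Hypothetical mapping of brand names to the domains they legitimately send from.
TRUSTED_DOMAINS = {
    "examplebank": {"examplebank.com"},
    "acme payroll": {"acme-payroll.example"},
}


def sender_mismatch(from_header: str) -> bool:
    """Return True when the display name claims a known brand but the
    address domain is not one of that brand's trusted domains."""
    display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    name = display_name.lower()
    for brand, domains in TRUSTED_DOMAINS.items():
        if brand in name and domain not in domains:
            return True
    return False


print(sender_mismatch("ExampleBank Support <alerts@examplebank.com>"))         # False
print(sender_mismatch("ExampleBank Support <alerts@examp1ebank-secure.net>"))  # True
```

Because the check looks at the sending domain rather than the wording of the message, it is not affected by how polished the email text is.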

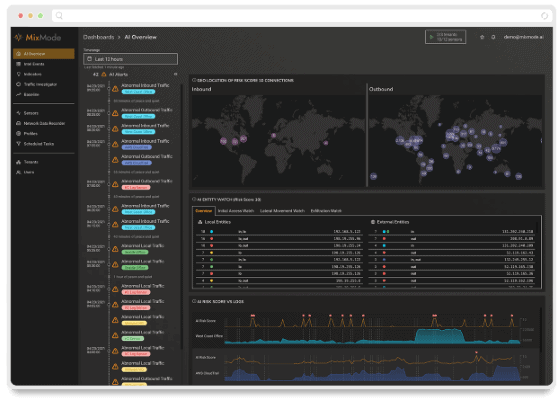

MixMode: Generative AI Built to Defend Against AI Generated Attacks

The MixMode Platform is the only generative AI cybersecurity solution built on patented technology purpose-built to detect and respond to threats in real-time, at scale, delivering:

- Continuous Monitoring: Continuously monitor cloud, network, and hybrid environments.

- Real-time Detection: Detect known and unknown attacks, including ransomware.

- Guided Response: Take immediate action on detected threats with remediation recommendations.

MixMode’s generative AI is uniquely born out of dynamical systems (a branch of applied mathematics) and self-learns an environment without rules or training data. MixMode’s AI constantly adapts itself to the specific dynamics of an individual network rather than using the rigid legacy ML models typically found in other cybersecurity solutions.

With MixMode, security teams can increase efficiencies, consolidate tool sets, focus on their most critical threats, and improve overall defenses against today’s sophisticated attacks.

MixMode’s patented self-learning AI was designed to identify and mitigate advanced attacks, including adversarial AI. An adversary would need to deeply understand MixMode’s algorithms and processes to evade detection. However, in attempting to learn and replicate MixMode's AI, the adversary's behavior would likely be detected as abnormal by the platform, triggering an alert and preventing further damage.

Reach out to learn more about how we can help you defend against AI-generated attacks.