What is Generative AI?

Introduction

Generative AI has emerged as a promising solution to enhance cybersecurity defenses. This subset of artificial intelligence utilizes machine learning algorithms to generate new data. In cybersecurity, generative AI can detect patterns and anomalies in data, improving threat detection capabilities. However, it is essential to understand both the positive and negative aspects of utilizing generative AI in cybersecurity.

What is Generative AI?

Generative AI is an emerging and exciting field of artificial intelligence. It can produce content such as text, graphics, audio, and synthetic data, often through a simple user interface. Although it may look like a recent breakthrough, the idea dates back to the chatbots of the 1960s. The introduction of Generative Adversarial Networks (GANs) in 2014, however, made generative AI far more powerful at creating convincing images and audio. This has opened up new opportunities, such as improved video dubbing and richer educational content, that were difficult to achieve before generative AI.

Advances in generative AI bring many potential rewards. For example, it could be used to create interactive learning content that presents engaging visuals with sound, which is difficult and costly to produce with traditional methods. Businesses have also taken notice and are finding new applications, such as automated customer service agents whose natural language abilities are powered by large language models. Overall, generative AI holds immense potential across many industries.

How does it work?

Generative AI is a form of artificial intelligence that creates new digital content in response to a prompt, which can be text, images, video, designs, or musical notes. It uses various AI algorithms to return new content for the submitted prompt, whether essays on a particular topic, solutions to complex problems, or deepfakes created from real-world pictures and audio of individuals.

In the past, using these models meant learning specialized tools and programming languages such as Python to submit data through an API or other methods. Now, companies are building user interfaces that let people submit requests in plain language rather than technical jargon, making this type of machine learning simpler and more accessible for beginners and experienced users alike. The range of potential applications keeps growing as more industries adopt the technology.
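To make the prompt-in, content-out loop concrete, here is a deliberately tiny generative model: a word-level bigram (Markov chain) model. It learns which words followed which in a training text, then continues a prompt by sampling plausible next words. Modern generative models use neural networks with billions of parameters rather than word-count tables, so treat this only as an illustration; the corpus and function names are made up for the example.

```python
import random
from collections import defaultdict


def train_bigrams(corpus):
    """Record, for each word, every word that followed it in the training text."""
    transitions = defaultdict(list)
    words = corpus.split()
    for current, nxt in zip(words, words[1:]):
        transitions[current].append(nxt)
    return transitions


def generate(transitions, prompt, max_words=10, seed=0):
    """Continue the prompt by repeatedly sampling a next word seen in training.

    Duplicates in each candidate list mean frequent continuations are sampled more often.
    """
    rng = random.Random(seed)
    output = prompt.split()
    for _ in range(max_words):
        candidates = transitions.get(output[-1])
        if not candidates:  # no known continuation for this word: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical training text.
corpus = (
    "the attacker sent a phishing email . the analyst flagged the phishing email . "
    "the analyst blocked the attacker ."
)
model = train_bigrams(corpus)
print(generate(model, "the analyst"))
```

Every word the model adds actually followed the previous word somewhere in the corpus, so the output is new text that still mirrors the patterns of its training data, which is the same basic relationship larger generative models have with theirs.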

What are Generative Models?

Generative AI models are increasingly used to create realistic representations of the world. By combining various algorithms, such as natural language processing techniques, developers can generate new content, including images, from a user's query or prompt. Because these models can encode the biases and inaccuracies present in their training data, caution is needed when building and using them. Neural network architectures such as generative adversarial networks (GANs) and variational autoencoders (VAEs) have become very popular for tasks such as generating human faces, creating synthetic data for AI training, or even imitating particular people.

The capabilities of generative AI models are powerful, but they come with challenges around accuracy and bias. Developers must take extra precautions to ensure that generated content accurately reflects what is intended and does not perpetuate underlying biases. While these models offer compelling new ways to work with data, they must be used responsibly so that their results benefit people without propagating harmful misperceptions.
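As a minimal illustration of the synthetic-data use case, the sketch below treats a single numeric feature as normally distributed, estimates its mean and standard deviation from real observations, and samples new values. GANs and VAEs learn far richer distributions with neural networks; the Gaussian assumption, the function names, and the session-duration data here are hypothetical simplifications.

```python
import random
import statistics


def fit_gaussian(samples):
    """Estimate the mean and standard deviation of the real observations."""
    return statistics.mean(samples), statistics.stdev(samples)


def generate_synthetic(mean, std, n, seed=0):
    """Draw n new values from the fitted normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


# Hypothetical "real" data: user session durations in seconds.
real_durations = [42.0, 55.5, 38.2, 61.0, 47.3, 52.8, 44.1, 58.6]
mu, sigma = fit_gaussian(real_durations)
synthetic = generate_synthetic(mu, sigma, n=1000)
print(f"real mean={mu:.1f}  synthetic mean={statistics.mean(synthetic):.1f}")
```

The sketch also shows the bias concern in miniature: the generator can only reproduce the distribution it was fitted to, so any skew or gap in `real_durations` carries straight into the synthetic data.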

How is Generative AI applied to cybersecurity?

Generative artificial intelligence (AI) has practical applications in many industries, including cybersecurity. It is a subset of AI that generates new data, graphics, or text by learning patterns from training data. When deployed effectively, it can transform how security teams identify and respond to threats.

The availability of generative AI tools and the growing capabilities of nation-state actors have resulted in more personalized and convincing cyber threats. In this environment, human defenders must work alongside AI to improve cyber resilience and minimize business disruptions caused by increasingly automated, AI-driven attacks.

Positive Use Cases in Cybersecurity:

Generative AI can be used in cybersecurity to enhance security operations by automating tasks, reducing noise, and prioritizing threats. This can help organizations combat the ever-evolving cyber threat landscape effectively.

- Generative AI can analyze historical security event data to predict the behavior of potential attackers. These predictions can be used to improve security controls and bolster defenses against future attacks.

- Penetration testers and red teams can use generative AI to create attack simulations that closely replicate the techniques employed by real attackers. This enables security experts to evaluate the effectiveness of their defenses, identify weaknesses, and strengthen their overall security posture.

- Automatically generating simulated phishing emails, texts, and phone calls makes security awareness training more realistic.

- Simulating new types of malware and attack variants to expand datasets used to train defensive AI systems.

- Automatically creating fake database records, system logs, and network traffic to test detection systems with a diverse set of never-before-seen data.

- Rapidly prototyping new ideas for exploits, payloads, and obfuscation to stress test systems and find weaknesses before adversaries do.

- Automating the documentation of repetitive policies, controls, and processes to save analyst time.

- Responding to analysts' natural language queries with detailed contextual explanations and answers.

- Summarizing long vulnerability reports and security documents into concise, actionable insights.

- Developing new security tooling and scripts by generating code based on high-level concepts described in plain language.

- Producing realistic adversarial content like fake social media profiles and blog posts for threat intelligence research.
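The idea of generating synthetic logs to test detection systems can be sketched as follows. This uses hard-coded random rules rather than a trained generative model, so it only stands in for what a real generator would learn from production data. The event fields, the IP ranges (203.0.113.0/24 is a documentation range), and the 20-failure threshold in `detect_bruteforce` are all hypothetical choices made for illustration.

```python
import random
from collections import Counter
from datetime import datetime, timedelta


def synthetic_auth_log(n_normal, attacker_ip, n_attack, seed=0):
    """Generate hypothetical login events: benign traffic plus an injected brute-force burst."""
    rng = random.Random(seed)
    start = datetime(2024, 1, 1, 9, 0, 0)
    users = ["alice", "bob", "carol", "dave"]
    events = []
    for _ in range(n_normal):
        events.append({
            "time": start + timedelta(seconds=rng.randint(0, 3600)),
            "src_ip": f"10.0.0.{rng.randint(2, 50)}",
            "user": rng.choice(users),
            "success": rng.random() > 0.05,  # roughly 5% benign typos
        })
    for i in range(n_attack):
        # One failed "admin" login per second from a single source.
        events.append({
            "time": start + timedelta(seconds=600 + i),
            "src_ip": attacker_ip,
            "user": "admin",
            "success": False,
        })
    events.sort(key=lambda e: e["time"])
    return events


def detect_bruteforce(events, threshold=20):
    """Hypothetical detection rule: flag sources with at least `threshold` failed logins."""
    failures = Counter(e["src_ip"] for e in events if not e["success"])
    return {ip for ip, count in failures.items() if count >= threshold}


log = synthetic_auth_log(n_normal=500, attacker_ip="203.0.113.7", n_attack=50)
print(detect_bruteforce(log))
```

Because the attack is injected on purpose, the test data carries its own ground truth: if the rule fails to flag the attacker's IP, or flags benign hosts, the detection logic needs work before real adversaries find the gap.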

Limitations and Risks:

- Could generate harmful content like fake news if misused for propaganda or disinformation.

- Automated phishing and social engineering content risks accidentally harming people if released irresponsibly.

- Generated exploits and malware could lead to dangerous uses if they fall into the wrong hands.

- AI-generated content may contain biases, errors, or lack nuance compared to human creations.

- Widespread use could lead to an "AI arms race," escalating generative capabilities among attackers and defenders.

- Malicious use could automate spear phishing, social engineering, and other customized attacks.

- Generative models introduce security risks of their own (e.g., training data poisoning, adversarial examples).

- Could automate the creation of convincing fake media used to spread disinformation.

- Could widen the capability gap between well-funded criminals or nation-states and defenders.

- Explainability challenges increase with generative models compared to analytical AI.

- Potential to overwhelm humans with more data, alerts, and content than they can handle.
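Training data poisoning, listed among the risks above, can be shown with a toy detector. The sketch assumes a hypothetical one-dimensional "suspicion score" per sample and a classifier that places its threshold halfway between the benign and malicious class means. Real detectors are far more complex, but the failure mode is the same: mislabeled samples injected into training shift the learned boundary so that a real attack slips through.

```python
import statistics


def train_threshold(samples):
    """Place the decision threshold halfway between the benign and malicious class means.

    `samples` is a list of (score, is_malicious) pairs.
    """
    benign = [score for score, bad in samples if not bad]
    malicious = [score for score, bad in samples if bad]
    return (statistics.mean(benign) + statistics.mean(malicious)) / 2


def is_flagged(score, threshold):
    """Flag a sample as malicious if its score reaches the threshold."""
    return score >= threshold


clean = [(0.10, False), (0.20, False), (0.15, False),
         (0.80, True), (0.90, True), (0.85, True)]
# The attacker slips high-scoring samples into the training data, labeled as benign.
poisoned = clean + [(0.95, False)] * 6

attack_score = 0.70
t_clean = train_threshold(clean)        # about 0.50
t_poisoned = train_threshold(poisoned)  # about 0.77
print(is_flagged(attack_score, t_clean), is_flagged(attack_score, t_poisoned))  # True False
```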

What are large language models, and how are they applied in cybersecurity?

Large language models (LLMs) are deep-learning artificial intelligence systems trained on massive text datasets to understand and generate natural language. The key characteristics of LLMs are:

- Scale - Trained on billions or trillions of words to learn nuances of human language.

- Architecture - Use transformer neural networks that capture long-range dependencies in text.

- Representation - Develop high-dimensional vector representations of words and sentences.

- Generation - Can generate new coherent, human-like text for a given prompt.

- Self-supervision - Most are pretrained with self-supervised learning (for example, predicting the next word in a passage), which does not require labeled data.
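The "Architecture" point above refers to the attention mechanism at the core of transformers. This minimal sketch implements scaled dot-product attention, softmax(QKᵀ / √d_k)V, in plain Python. For brevity it reuses the token vectors directly as queries, keys, and values; real transformers first apply learned projection matrices and run many attention heads in parallel. The 2-dimensional token vectors are made up.

```python
import math


def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query scores every key,
    then takes a softmax-weighted average of the values."""
    d_k = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weight_rows.append(weights)
    return outputs, weight_rows


# Hypothetical 2-d vectors for a 4-token sequence; tokens 0 and 3 are similar.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = attention(tokens, tokens, tokens)
print([round(w, 3) for w in weights[0]])  # [0.335, 0.165, 0.165, 0.335]
```

Token 0 attends as strongly to token 3 as to itself, even though they are three positions apart. Because attention weights depend on content rather than distance, transformers can capture long-range dependencies in text.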

LLMs are being applied in cybersecurity in various ways:

- Log analysis - Processing vast volumes of unstructured security log data to extract insights.

- Document comprehension - Reading and summarizing security policies, reports, and threat intel documents.

- Natural language queries - Allowing analysts to ask questions in plain language and get answers.

- Email scanning - Analyzing phishing campaigns and malware attachments.

- Report generation - Automating the creation of narrative reports and visualizations.

- Threat forecasting - Predicting emerging attack techniques based on curated threat intel.

- Adversarial simulation - Generating synthetic malicious content like phishing emails to stress test defenses.

- Automating repetitive tasks - Handling triage, alert processing, and maintenance.
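For the log-analysis use case, one practical constraint is that an LLM can only read a limited amount of text per request. Large logs are therefore usually split into chunks before they are summarized. The sketch below uses a character budget as a rough stand-in for the model's token limit. The instruction text, the function names, and the 2,000-character default are hypothetical, and the call to an LLM itself is omitted because it depends on the provider.

```python
def chunk_lines(lines, max_chars):
    """Group log lines into chunks whose joined length stays within max_chars.

    A single line longer than max_chars still becomes its own (oversized) chunk.
    """
    chunks, current, size = [], [], 0
    for line in lines:
        if current and size + len(line) + 1 > max_chars:
            chunks.append(current)
            current, size = [], 0
        current.append(line)
        size += len(line) + 1  # +1 for the newline that joins lines
    if current:
        chunks.append(current)
    return chunks


def build_prompts(lines, max_chars=2000):
    """Wrap each chunk in a summarization instruction, one prompt per LLM request.

    The budget applies to the log text only, not to the instruction prefix.
    """
    instruction = "Summarize the security-relevant events in these log lines:\n"
    return [instruction + "\n".join(chunk) for chunk in chunk_lines(lines, max_chars)]


log_lines = [
    f"Jan 1 09:{i % 60:02d}:00 host sshd: Failed password for root from 203.0.113.7"
    for i in range(100)
]
prompts = build_prompts(log_lines, max_chars=500)
print(len(prompts), "prompts")
```

Each prompt would then be sent to the model separately, and the partial summaries could be combined in a final request. This map-then-combine pattern is one common way to fit large inputs into a limited context window.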

LLMs apply deep learning to interpret, generate, summarize, and reason about language to augment cybersecurity operations. Their flexibility enables many security applications.

What is the connection between generative AI and large language models?

There is a close connection between generative AI and large language models (LLMs):

Generative AI refers to machine learning models that create new data samples and content as output. This includes things like images, audio, video, and text.

Large language models are a type of generative AI specialized in generating natural language text.

LLMs such as GPT-3 and Codex are trained on massive text datasets, enabling them to generate remarkably human-like text for a given prompt. DALL-E, sometimes grouped with them, is a text-to-image model rather than an LLM.

Their huge training datasets and transformer neural network architecture allow LLMs to learn the underlying structure and semantics of human language effectively.

As a result, LLMs can generate new, coherent text that can be customized for different applications.

LLMs' text-generation capabilities make them a foundational technology for many types of generative AI when language output is needed.

Large language models are a uniquely capable subtype of generative AI that excels at producing human-quality text. Their natural language generation abilities make them useful for a wide range of generative applications, including content creation, summarization, translation, and conversational agents.

LLMs exemplify generative AI while also fueling advances in other areas of generative technology, and progress in each continues to drive the other forward.

What's the connection between large language models and ChatGPT?

ChatGPT is a chatbot application created by OpenAI that is powered by a large language model (LLM) in the background. There is a direct relationship between ChatGPT and LLMs:

ChatGPT is built on top of OpenAI's GPT family of LLMs (initially GPT-3.5, later GPT-4), which are trained on massive text datasets.

These models were fine-tuned using reinforcement learning from human feedback (RLHF) to make their responses more helpful and less likely to be harmful or untruthful.

The core natural language generation capabilities of ChatGPT, however, come from the huge scale and deep learning architecture of the underlying GPT model.

ChatGPT's abilities to understand context, handle follow-up questions, and provide remarkably human-like conversational responses all stem from the capabilities of its underlying large language model.
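Follow-up questions work because the application resends the earlier conversation to the model on every turn; the underlying model is typically stateless between requests. The sketch below shows that bookkeeping. The role/content message layout mirrors the convention used by common chat APIs, but the `Conversation` class, the `stub_model` function, and the message limit are hypothetical, and a real client would call a provider's API instead of the stub.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps chat history so each new question is answered in context."""

    def __init__(self, model: Callable[[List[Message]], str],
                 system_prompt: str, max_messages: int = 20):
        self.model = model
        self.system: Message = {"role": "system", "content": system_prompt}
        self.history: List[Message] = []
        self.max_messages = max_messages

    def ask(self, question: str) -> str:
        self.history.append({"role": "user", "content": question})
        # Keep only the most recent messages so the prompt stays bounded;
        # the system message is always sent first.
        self.history = self.history[-self.max_messages:]
        reply = self.model([self.system] + self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def stub_model(messages: List[Message]) -> str:
    """Stand-in for a real LLM call: reports how much context it received."""
    return f"received {len(messages)} messages"


chat = Conversation(stub_model, "You are a security assistant.")
print(chat.ask("What is a brute-force attack?"))  # received 2 messages
print(chat.ask("How would I detect one?"))        # received 4 messages
```

The second question arrives together with the first question and its answer, which is what lets the model resolve "one" as "a brute-force attack." Trimming old messages keeps requests within the model's context limit at the cost of forgetting early turns.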

The GPT model's training process equips ChatGPT to answer questions, hold dialogs, and generate text across many topics.

ChatGPT effectively provides an interface for interacting with a GPT model in a conversational chatbot format.

Improvements to ChatGPT are driven largely by ongoing development of the underlying models' size and training methodology.

ChatGPT represents a state-of-the-art application made possible by advances in large language models such as GPT-4. The natural language prowess of LLMs is foundational to the human-like chat abilities ChatGPT demonstrates. Their connection highlights the growing real-world impact of LLMs.

Conclusion

Generative AI has the potential to revolutionize the field of cybersecurity by enhancing threat detection capabilities. However, it is essential to address the limitations and risks associated with its use.

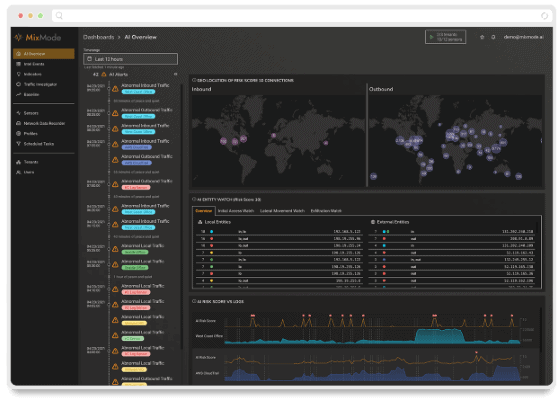

MixMode, with its Third Wave AI technology, offers a dynamic threat detection platform that goes beyond traditional generative AI solutions. MixMode's AI provides unparalleled threat detection capabilities and strengthens cybersecurity defenses by continuously learning and adapting to changing situations.

Generative AI-enabled security solutions can significantly enhance security operations by automating tasks, reducing noise, and prioritizing threats as the threat landscape evolves. However, it is crucial to understand the potential vulnerabilities and take measures to prevent malicious and inappropriate applications.

By using generative AI in cybersecurity, organizations can keep pace with malicious actors, respond effectively to an ever-evolving threat landscape, and stay ahead of the curve.