Delivering Generative AI to Cybersecurity for Over 3 Years

“Don’t call it a comeback. I’ve been here for years!” – LL Cool J

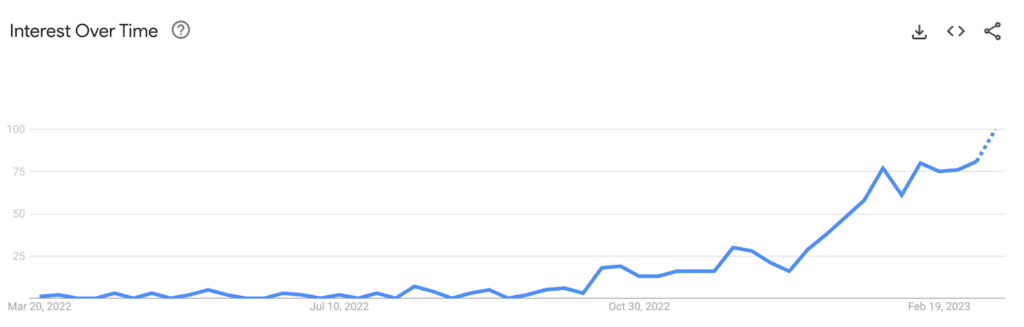

As LL Cool J put it best, today's flavor isn't always new. Generative AI, led by ChatGPT and others, is going through a hype cycle at a level not seen in years. Yet Generative AI isn't new, per se: a predecessor of ChatGPT, a chatbot called ELIZA, dates all the way back to the 1960s. What is new, though, is the ability of these modern systems to deliver value to users at a quality and scale that is truly groundbreaking.

A common definition of Generative AI

“Generative artificial intelligence (AI) describes algorithms (such as ChatGPT) that can be used to create new content, including audio, code, images, text, simulations, and videos.” – McKinsey

What's different now is that this content is created almost instantly, and at a quality high enough that users can actually put the results to work rather than treating them as a novelty or party trick.

Put another way, we now have AI systems that deliver a demonstrable Return on Investment (ROI) when content is created with a tool rather than by more traditional means, and that ROI can be orders of magnitude higher than anyone could have hoped for.

For example, you can get your term paper written in 5 seconds instead of 5 weeks, or get a portrait generated from 10 selfies rather than waiting 10 weeks for a professional photographer.

There is no question that the new tools built on these capabilities will radically change many industries, from solving previously unsolved problems to delivering dramatic productivity gains on experts' everyday tasks.

So, what does this mean for Cybersecurity?

The negative implications of tools like ChatGPT have already been widely covered. Bad actors will very likely use these capabilities in their attacks, crafting more advanced phishing campaigns and building attacks on newly discovered vulnerabilities faster.

On the defensive side, when it comes to Cybersecurity and Generative AI, you have to look at what traditionally demands time and investment from expert practitioners. One of the major things Security Operations Center (SOC) analysts have to build and maintain is an alerting framework for their environment. It is not uncommon for teams to take months, or even years (!), to deploy security tools into an environment, after which they must constantly maintain rules, thresholds, and playbooks. This tedious, labor-intensive approach to protecting business assets and operations is far from optimal.

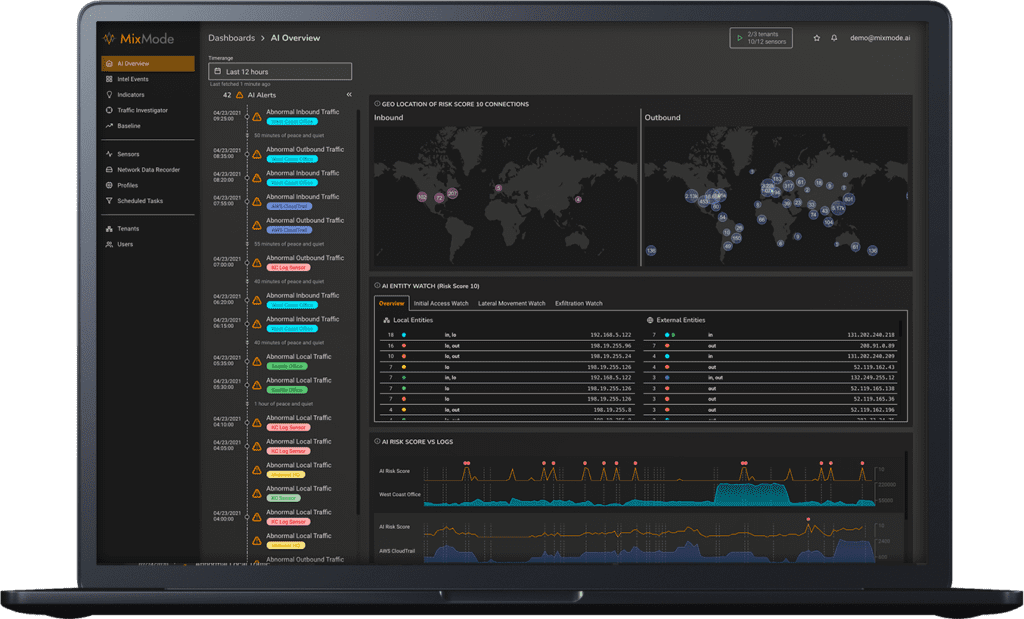

We launched MixMode over 3 years ago with a Generative AI designed to autonomously build the alerting framework for SOC teams, with greater accuracy and timeliness than legacy rules-based tools, which require extensive human maintenance and cannot detect novel attacks. Because it can be deployed immediately, security teams no longer have to port or write rules when standing up their Cybersecurity framework. This Generative AI uses a self-learning approach to automatically understand what's expected in the environment and build the alerting capabilities. It also sorts the alerts generated by any rules and indicators of compromise (IOCs) the team provides, giving full security coverage with the least amount of effort.

When evaluating which tools will knock you out, keep in mind that the ROI of Generative AI should be demonstrable and easy to understand. Reach out to our AI experts to set up a demo today.