Yann LeCun and Yoshua Bengio were recently interviewed by VentureBeat Magazine on self-supervised learning and human-level intelligence in AI. Our CTO, Dr. Igor Mezic, sat down with our team to discuss some of the most interesting points in the LeCun article and to offer a potential answer to the search for truly self-supervised AI.

According to Turing Award winners Yoshua Bengio and Yann LeCun, supervised learning entails training an AI model on a labeled data set, and LeCun thinks it will play a diminishing role as self-supervised learning comes into wider use:

VentureBeat: “Instead of relying on annotations, self-supervised learning algorithms generate labels from data by exposing relationships between the data’s parts, a step believed to be critical to achieving human-level intelligence,” said LeCun.

Question to Dr. Mezic: Do you agree with LeCun that supervised learning will play a diminishing role as self-supervised learning comes into the picture? Why?

Dr. Igor Mezic: Yes, I certainly agree with that. In my mind, supervised learning was never really a reflection of true learning the way humans do it, because we do not use a lot of labeling: we are not fed a lot of labeled examples to learn from. Yet we learn very quickly; within 24 hours we can learn to drive a car. That’s essentially because we have a predefined model of motion in our brains, so it’s generative. We have a model that can tell us, “Well, if I do this, then I expect this object to move this way.”

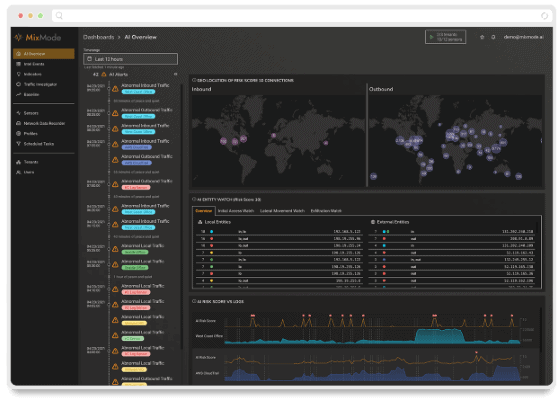

I have not worked on supervised learning methods because I don’t believe they yield the results we need in the development of AI. We have focused on self-supervised methods, which we have been putting into practice at MixMode for several years now. LeCun and Bengio say they hope to see commercial unsupervised learning in the future, but it is already available today at MixMode. Our system learns from a very limited amount of additional information and builds on an existing generative model to predict the future and suggest actions. So we have already put into practice, in a limited form, the technology they are advocating for the future.

VentureBeat: “Most of what we learn as humans and most of what animals learn is in a self-supervised mode, not a reinforcement mode. It’s basically observing the world and interacting with it a little bit, mostly by observation in a task-independent way,” said LeCun. “This is the type of [learning] that we don’t know how to reproduce with machines.”

Q: Why can’t you reproduce this learning with machines? Why is this type of learning more beneficial?

Dr. Mezic: I actually disagree with that statement. I think we can, and we have been able to many times in the past. The keywords are “generative models.” There is one key aspect of supervised learning that was absolutely wrong from the beginning: it never considered time as a dimension, and when you take out time you remove causality, leaving you with only correlation.

Causality is about time. If you only have correlations, you need a lot of labeling. But if you have causality, you realize that a cat can only move in certain ways. Causally, if it’s here at this point in time, it can only be in a limited number of places at the next point in time. If you rely on pure correlation, however, you’re feeding your network pictures of cats in various positions that are not causally related to each other, which means your neural network never learns the dynamics. This problem was somewhat alleviated by the invention of convolutional neural networks, which LeCun pioneered, but that approach still does not take in the causality that time brings. In my work, I started with time as a dimension. I knew that if I wanted causality, meaning if I wanted to generate dynamics from data I had seen before, I needed to understand how things evolve in time, and that’s what MixMode’s technology is already based on today.
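As a toy illustration of the cat argument (not MixMode’s method), the sketch below assumes a hypothetical maximum speed and frame interval, then checks whether an ordered sequence of positions respects that physical limit. An ordered trajectory passes; the same frames shuffled, like a bag of unrelated labeled snapshots, break the constraint, because their order no longer carries any causal information. The names `MAX_SPEED`, `DT`, and `is_causally_consistent` are invented for this example.

```python
import math
import random

# Hypothetical bound: the fastest the tracked object (say, a cat) can move.
MAX_SPEED = 2.0  # assumed units: metres per second
DT = 0.5         # assumed time between frames, in seconds


def is_causally_consistent(positions, max_speed=MAX_SPEED, dt=DT):
    """Return True if every step in an ordered list of (x, y) positions stays
    within the distance the object could physically cover in one frame."""
    max_step = max_speed * dt
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        if math.hypot(x1 - x0, y1 - y0) > max_step:
            return False
    return True


# An ordered trajectory: each frame is a small move from the previous one.
trajectory = [(0.1 * t, 0.05 * t) for t in range(50)]

# The same frames in random order, as in a set of unrelated snapshots.
shuffled = trajectory[:]
random.Random(0).shuffle(shuffled)

print(is_causally_consistent(trajectory))  # True
print(is_causally_consistent(shuffled))
```

The point is only that time order carries constraints a model can exploit; a real system would learn the dynamics rather than hard-code a speed limit.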

Q: Why start with time?

Dr. Mezic: We’re going to get really technical here, but the answer is actually quite simple.

Space is not homogeneous: you look around and there is a window here and a painting there. Time, however, is homogeneous, unless you’re dealing with relativity theory, where it gets kind of weird. Generally, time is the same for you as it is for me. Interestingly, representing time requires a very specific mathematical structure. Because time is completely homogeneous, meaning we all perceive it the same way, it induces a fairly simple mathematical structure on how things evolve. With space you have to represent many things in many different ways; in time there is basically one way. It’s that simple.
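One standard way to make that “simple mathematical structure” concrete is the flow of a time-homogeneous system; this is our illustration, and the interview does not spell out MixMode’s own formulation. Because the rule of evolution does not depend on when you start, the map $\Phi^t$ that advances a state $x$ by an elapsed time $t \ge 0$ depends only on $t$, and advancing by $s$ and then by $t$ is the same as advancing by $t + s$. The same property carries over to any measured quantity $f$ of the state through the linear operator $U^t$ (known in dynamical systems as the Koopman operator):

$$
\Phi^{0}(x) = x, \qquad \Phi^{t+s}(x) = \Phi^{t}\!\left(\Phi^{s}(x)\right), \qquad U^{t} f = f \circ \Phi^{t} \;\Longrightarrow\; U^{t+s} = U^{t}\,U^{s}
$$

A system whose rule of evolution changed depending on the absolute time would not, in general, have this one-parameter structure.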

If you want a nice analogy, consider why people like music. My explanation is that music contains the same periodicities we perceive in daily life, like the earth spinning on its axis and the sun rising. There’s something our brain likes about those periodicities, because it uses them to “perceive” or “predict” what’s coming next in a song, or that the sun is going to set tonight.

You can feel it coming, and there is a certainty about repeated regularity. It exists in time but not in space. In space, everything is random and different. The deep learning community never paid much attention to the role of time in causality, or to the specific mathematical structure you need to impose to represent that causality in time. My own research started from that regularity, so LeCun and I arrived at the same point about learning from two totally different directions. LeCun approached it by trying to copy certain structures in the human brain, without paying attention to causality. I, however, didn’t care what the structure was, because I just wanted to understand the causality. Once I understand the causality, I can learn the underlying structure in other dimensions with neural networks or anything else I want. This is what is missing from current research, and it has been missing since the beginning.

VentureBeat: “Distributions are tables of values — they link every possible value of a variable to the probability the value could occur. They represent uncertainty perfectly well where the variables are discrete, which is why architectures like Google’s BERT are so successful. Unfortunately, researchers haven’t yet discovered a way to usefully represent distributions where the variables are continuous — i.e., where they can be obtained only by measuring.

LeCun notes that one solution to the continuous distribution problem is energy-based models, which learn the mathematical elements of a data set and try to generate similar data sets. Historically, this form of generative modeling has been difficult to apply practically, but recent research suggests it can be adapted to scale across complex topologies.”

Q: Do you agree with LeCun’s theory of energy-based models? How could this be done in a real-life scenario?

Dr. Mezic: The theory uses ideas from what physicists call “statistical mechanics.” Statistical mechanics is a very successful part of physics because it can tell you, for example, the volume of a given amount of water if you know its temperature and pressure. But those equations are static; they don’t take time into account. So, from my perspective, what can advance AI is a dynamical model that takes into account the causality of time.

Just think about the classic security situation of credential stuffing, in which attackers obtain lists of usernames and passwords from the dark web, try them against your system in an automated manner, and get in because you used the same password on two sites. This is happening all the time now that everyone is working from home because of COVID-19.

So how useful is it to learn labels from past breaches if something like this has never happened before? The answer is that you’re not going to catch it. But if you can see a spike over time in the volume of login attempts on the system, you’re going to recognize immediately that it’s something you need to worry about.

Even the simplest systems for this type of detection say, “This user has tried to log in four times, so we need to block their account.” Even these systems consider time first, and they are actually quite successful. It’s not deep learning; they’re just counting the number of times someone has tried to log in over a short period of time.
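A minimal sketch of that kind of time-first rule, assuming a sliding window of 60 seconds and the four-attempt threshold from the example above; the class and method names are hypothetical, and this is not MixMode’s detection logic. Each account keeps a queue of recent attempt timestamps, old attempts fall out of the window, and the account is flagged once the count inside the window reaches the threshold.

```python
from collections import deque


class LoginAttemptMonitor:
    """Hypothetical sliding-window counter: flag an account once it makes
    max_attempts login attempts within window_seconds."""

    def __init__(self, max_attempts=4, window_seconds=60.0):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts = {}  # account name -> deque of attempt timestamps

    def record(self, account, timestamp):
        """Record one attempt (timestamps assumed non-decreasing per account).
        Return True if the account should be blocked."""
        attempts = self._attempts.setdefault(account, deque())
        attempts.append(timestamp)
        # Drop attempts that have fallen out of the time window.
        while attempts and timestamp - attempts[0] > self.window_seconds:
            attempts.popleft()
        return len(attempts) >= self.max_attempts


monitor = LoginAttemptMonitor(max_attempts=4, window_seconds=60)

# Four attempts within 15 seconds: the fourth one trips the rule.
burst = [monitor.record("alice", t) for t in (0, 5, 10, 15)]
print(burst)  # [False, False, False, True]

# Four attempts an hour apart: never more than one inside the window.
spread = [monitor.record("bob", t) for t in (0, 3600, 7200, 10800)]
print(spread)  # [False, False, False, False]
```

The same count, taken across all accounts instead of per account, gives the volume-of-attempts signal that reveals a credential-stuffing spike.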

Mathematically, these energy-based models are cool, but from a practical point of view I don’t see how they solve a problem.

VentureBeat: “Another missing piece in the human-level intelligence puzzle is background knowledge. As LeCun explained, most humans can learn to drive a car in 30 hours because they’ve intuited a physical model about how the car behaves. By contrast, the reinforcement learning models deployed on today’s autonomous cars started from zero — they had to make thousands of mistakes before figuring out which decisions weren’t harmful.

“Obviously, we need to be able to learn models of the world, and that’s the whole reason for self-supervised learning — running predictive models of the world that would allow systems to learn really quickly by using this model,” said LeCun. “Conceptually, it’s fairly simple — except in uncertain environments where we can’t predict entirely.”

Dr. Mezic: This is key: we already have a system like that in place here at MixMode. It takes into account precisely this kind of uncertainty. If I send an email at 3 pm or at 4:45 pm, those two actions shouldn’t look different to an AI system analyzing them. The system should recognize that there is uncertainty in when I’m going to send an email and not learn one exact time, because it’s going to be different next time. Deep learning systems are well known for overfitting: if I send an email at 3 pm, the system will try to learn exactly that, and you don’t want it to, because you’re not going to send every email at 3 pm.

You don’t want to learn the exact times; you want to learn the general pattern. That’s precisely what our MixMode platform does. It learns the general pattern that can be predicted, and it also parameterizes the uncertainty in a way that’s mathematically very efficient, so our computational cost for something like this is very small. I recall LeCun saying previously that energy models are not scalable. I believe that if you understand the time dimension and what’s coming next, you actually do get scalable models.
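The contrast between memorizing exact times and learning a pattern plus its uncertainty can be sketched in a few lines. This is a toy under stated assumptions, not how MixMode parameterizes uncertainty: send times are treated as hours on a single day (ignoring wrap-around at midnight), the pattern is summarized by a mean and standard deviation, and a time is flagged only if it lies more than `k` standard deviations from the mean. The history values and function names are hypothetical.

```python
import statistics


def fit_send_time_model(hours):
    """Summarize observed send times (hours since midnight) by their mean and
    sample standard deviation instead of memorizing each exact time."""
    return statistics.mean(hours), statistics.stdev(hours)


def is_unusual(hour, model, k=3.0):
    """Flag a send time more than k standard deviations from the mean."""
    mean, std = model
    return abs(hour - mean) > k * std


# Hypothetical history: afternoon emails, including 3 pm and 4:45 pm.
history = [15.0, 15.5, 16.75, 14.25, 15.0, 16.0, 15.25]
model = fit_send_time_model(history)


def is_unusual_memorized(hour, seen=frozenset(history)):
    """An 'overfit' rule that accepts only times it has seen exactly."""
    return hour not in seen


# 4:15 pm fits the learned pattern; 3 am does not.
for hour in (16.25, 3.0):
    print(hour, is_unusual(hour, model), is_unusual_memorized(hour))
# 16.25 False True
# 3.0 True True
```

The memorizing rule flags an ordinary 4:15 pm email simply because it never saw that exact time, while the two-parameter model only reacts to a genuinely different pattern; storing two numbers per pattern is also what keeps this kind of model cheap.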

VentureBeat: “I think that’s a big advantage for humans, for example, or with respect to other animals,” he said. “Deep learning is scaling in a beautiful way, and that’s one of its greatest strengths, but I think that culture is a huge reason why we’re so intelligent and able to solve problems in the world … For AI to be useful in the real world, we’ll need to have machines that [don’t] just translate, but that actually understand natural language.”

Dr. Mezic: What is natural language? Natural language for humans is probably very different from natural language for an AI system monitoring a network, and I think we are again falling into the trap of trying to replicate human intelligence. Humans have visual, auditory, and tactile sensory inputs; networks have completely different sensory inputs. So what is the language for that? The language has to be created by the machine itself. That is quite important, because at the end of the day you want to communicate in the same language across the board, but it’s not a natural language like the one that lets you talk to a human. The machine doesn’t need natural language. It shouldn’t talk to you in English unless it’s specifically a robot built to speak English; in a security setting, that doesn’t make any sense to me.

Q: What about the idea that AI will only gain the capabilities LeCun hopes to see “in the future”? Do you agree?

Dr. Mezic: Certainly not entirely. This communication issue is important, but I don’t think the solution should be natural language in the way a human thinks of it. You can certainly consider theories of language the way Chomsky does and pick up some ideas there. But, in a limited way, MixMode already provides a scaled system that does this generative, model-based learning today.

That’s precisely what our system does, and it’s very scalable because it does exactly what LeCun describes: it does not try to learn everything from scratch when presented with a new situation. It takes in everything that has happened before and checks whether the new situation differs enough for something to be wrong. That’s actually how humans learn, too. We see certain things, we learn certain things, and we feel we understand our environment. Then something new suddenly happens, and whether we need to pay attention to it depends on the model of the world we’ve already built. We ask ourselves: “How different is this from what I’ve seen before?”
