Unsupervised AI, also known as context-aware or third-wave AI (as defined by DARPA), is notoriously difficult to explain because there is no appropriate test for measuring just how powerful the intelligence is.

For decades, we have relied on the Turing test to determine whether or not a computer is capable of answering questions like a human being. Developed by Alan Turing in 1950, the Turing test is a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

Turing proposed that a human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses. The evaluator would be aware that one of the two partners in conversation is a machine, and all participants would be separated from one another. The conversation would be limited to a text-only channel such as a computer keyboard and screen so the result would not depend on the machine’s ability to render words as speech. If the evaluator cannot reliably tell the machine from the human, the machine is said to have passed the test. The test results do not depend on the machine’s ability to give correct answers to questions, only how closely its answers resemble those a human would give.

Wikipedia

Or in simpler terms: the computer is placed behind a curtain and has to answer questions posed by a human being. If the human cannot tell whether they are speaking to a computer or to another person, then the AI has passed the Turing test.

Since its introduction more than half a century ago, the Turing Test has inspired countless competitions, philosophical debates, media commentary, and epic sci-fi plots from Westworld to Ex Machina, not to mention its fair share of criticism from academia.

Several alternative tests have been proposed that arguably surpass the Turing Test as a measure of machine intelligence; however, none is as widely accepted as Turing's.

Why the Turing Test is no longer enough to test modern-day AI

Among other criticisms, detractors argue that the Turing Test measures just one aspect of intelligence: language.

A single conversational test may work well for evaluating chatbots, but it neglects the wide range of tasks that AI scientists and researchers have been working on to improve unsupervised AI holistically, including reasoning, learning and decision-making.

Evaluating context-aware AI arguably calls for something many levels beyond the Turing test. Context-aware AI (the terms unsupervised, context-aware and third-wave AI are used interchangeably here) not only answers questions as a human would but can also thoughtfully come up with its own questions to ask in response, which is why it is so valuable when applied to cybersecurity.

First- or second-wave cybersecurity AI only looks for past patterns in current data, which is of little help when the enemy is constantly adapting. In contrast, unsupervised AI does the following: (1) studies and builds an ongoing baseline of a network and (2) uses advanced machine learning and AI, specifically generative models of behavior, to predict network behavior and creatively identify and surface new threats.
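To make the "baseline plus deviation" idea more concrete, here is a minimal sketch, assuming a single numeric feature per host (for example, bytes sent per minute). It keeps a running mean and variance with Welford's online algorithm and flags observations whose z-score exceeds a threshold. This is a deliberately simple statistical stand-in, not MixMode's generative behavioral model; the class name `OnlineBaseline`, the feature, the warm-up length and the threshold of 3.0 are all hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class OnlineBaseline:
    """Running baseline for one numeric feature (hypothetical example).

    Uses Welford's online algorithm so the mean and variance update
    incrementally, without storing past observations.
    """
    threshold: float = 3.0   # z-score above which an observation is flagged
    warmup: int = 10         # observations to absorb before scoring anything
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0          # running sum of squared deviations from the mean

    def std(self) -> float:
        # Sample standard deviation; zero until at least two points are seen.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0

    def score(self, x: float) -> float:
        """Return the z-score of x against the current baseline."""
        sd = self.std()
        return abs(x - self.mean) / sd if sd > 0 else 0.0

    def update(self, x: float) -> bool:
        """Score x, fold it into the baseline, and report whether it was anomalous."""
        anomalous = self.count >= self.warmup and self.score(x) > self.threshold
        # Welford update of the mean and the sum of squared deviations.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)
        return anomalous


# Usage: hypothetical per-minute traffic for one host, ending in a spike.
baseline = OnlineBaseline()
traffic = [100, 102, 98, 101, 99, 103, 97, 100, 102, 98, 101, 950]
flags = [baseline.update(x) for x in traffic]
print(flags[-1])  # True: 950 is far outside the learned baseline
```

The sketch folds every observation, including flagged ones, back into the baseline, so a lasting change in behavior gradually becomes the new normal without human retuning. A production system would need an explicit policy for whether and how quickly anomalies are absorbed.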

Consider a musician who composes a new melody after learning to play a few songs. The melody is completely new and different, but it sounds good because it draws on knowledge of how songs are structured and how notes go together. Context-aware AI works similarly in cybersecurity: it studies past deviations and, instead of just looking for those same deviations in the future, comes up with its own ideas about what else could harm the network.

That is the benefit of unsupervised AI: it recognizes past deviations and, based on that data, anticipates brand-new things that could be harmful in the future. It creates a pattern out of a pattern rather than deriving rules from a previous pattern.

Another benefit of unsupervised AI is that it does not require human-labeled training, heavy maintenance or human supervision. Unlike most AI, which must be trained by a human or given a set of rules to learn by, unsupervised AI can understand a system and continuously learn about changes within it without human tuning or rules-based learning.

How unsupervised AI can help improve cybersecurity

A dangerous new trend in the network threat landscape is that hackers are using machine learning tools to break into systems. Take a common tactic, phishing, for example:

The problem of phishing is well known at this point, but the rise of artificial intelligence in this realm is perhaps less understood. AI-fueled techniques are lower cost, higher accuracy, with more convincing content, better targeting, customization and automated deployment of phishing emails.

The bad news about AI for cybersecurity: Hackers have access to the same tools as hospitals, Healthcare IT News

For example, bad actors change the wording of messages slightly so that they don't all sound the same and don't get caught by spam filters. This is rule-based machine learning: given a natural-language sentence structure, a rule tells the generator to swap one word in a way that doesn't change the meaning or break the sentence.
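To illustrate why a one-word change can slip past simple defenses, here is a minimal sketch, assuming a naive filter that blocks exact copies of a known message by hash. Swapping a single word produces a different SHA-256 digest, so the exact-match check misses the variant, even though a word-level Jaccard similarity shows the two messages are nearly identical. The messages and the helper names `digest` and `jaccard` are hypothetical, and real spam filters are far more sophisticated than either check.

```python
import hashlib


def digest(text: str) -> str:
    """SHA-256 hex digest of a message, as a naive exact-match filter might store it."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity: shared distinct words / all distinct words."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 1.0


# Hypothetical known-bad message and a one-word variant of it.
known = "Please verify your account details today to avoid suspension"
variant = "Please confirm your account details today to avoid suspension"

blocklist = {digest(known)}
print(digest(variant) in blocklist)        # False: the exact-match filter misses it
print(round(jaccard(known, variant), 2))   # 0.8: yet the texts are nearly identical
```

Both checks look only at past messages, which is the limitation the next paragraph describes.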

To be protected from these kinds of attacks, it's important to have context-aware AI that not only looks at past problems and derives rules to thwart the same kind of attacks, but also constantly comes up with new ideas of what to look for before those attacks happen.

In his previous article, 5 Reasons Why Context-aware Artificial Intelligence (CAAI) Is Needed in Cybersecurity, Dr. Igor Mezic, Chief Scientist at MixMode, laid out five reasons why context-aware artificial intelligence is a necessary step forward for applying AI to network security:

1 – False positives: In the current deployment of (first- and second-wave) AI systems, network security analysts are plagued by false positives and fear false negatives. A CAAI system is needed to minimize both. This new system also needs to understand the network security team's resources and to learn from the team's actions.

2 – Big data: The problem of false positives is amplified by the fact that modern corporate networks have large numbers of IP addresses. The internal traffic between source and destination pairs – which scales as the number of IP addresses squared – also produces a large volume of temporal data. It is difficult, even for an experienced analyst, to understand the traffic between all the different parts of the network, as well as its context in this big dynamic data realm. This large amount of data needs to be reduced to actionable intelligence by the CAAI system for the network analyst.

3 – Predictive ability: The flip side of big data is that it gives AI predictive capability, provided the AI can understand the context of the dynamics on the network. Current systems act in a reactive mode: they alert when something of large magnitude happens. A CAAI system can alert based on a small amount of activity that leads to a large-magnitude action sometime later.

4 – Metadata: Metadata is important. Was this scheduled activity? Did this occur before, in the same context? CAAI needs to take into account not only the nature of the network data but also the context of the situation.

5 – Human-machine interaction: A system that does not learn from past false positives and context, both local and on other networks, is spamming the network analyst. In contrast, a learning AI-based system reduces spam over time. We need an interactive AI environment, where human-machine interaction occurs seamlessly and learning is enabled both ways. The autonomy level of the system is important. At first, the user might set level 2 autonomy, where the user provides much of the monitoring and many of the commands (as with Tesla Autopilot), and then step up to level 3 or higher over time.
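The scaling claim in point 2 can be made concrete with a little arithmetic. With n internal IP addresses, the number of ordered source-destination pairs is n(n - 1), which grows roughly as n squared. The sketch below simply evaluates that formula for a few hypothetical network sizes; the sizes are illustrative, not measurements from any real network, and the function name `directed_pairs` is an assumption for this example.

```python
def directed_pairs(n_hosts: int) -> int:
    """Number of ordered (source, destination) pairs among n distinct hosts.

    Each host can talk to every other host, and A->B is counted separately
    from B->A, so the count is n * (n - 1), which grows roughly as n**2.
    """
    if n_hosts < 0:
        raise ValueError("n_hosts must be non-negative")
    return n_hosts * (n_hosts - 1)


# Hypothetical network sizes, chosen only to show the quadratic growth.
for n in (100, 1_000, 10_000):
    print(f"{n:>6} hosts -> {directed_pairs(n):>11,} possible directed pairs")
```

Going from 1,000 to 10,000 hosts multiplies the number of potential pairs by roughly 100 (from 999,000 to 99,990,000), and each pair can carry its own stream of temporal data for an analyst to make sense of.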

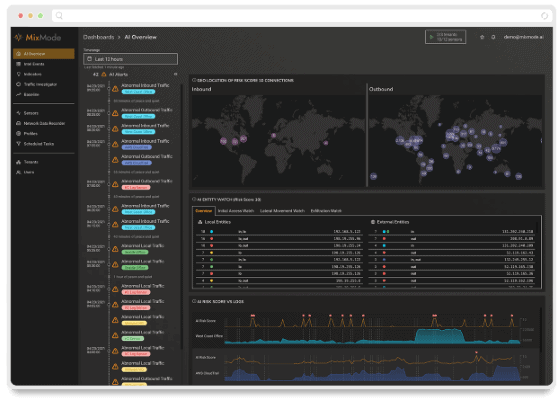

Drawing on more than 15 years of experience delivering projects for DARPA and the DoD, MixMode has built a third-wave AI that studies the baseline of a network and uses unsupervised AI to creatively identify and surface new threats.
