The 2020 Clearview AI data breach spawned hundreds of attention-grabbing headlines, and for good reason. The company works closely with law enforcement agencies and other entities by sharing personal information about millions of people, for a variety of purposes. The breach raised many questions about the vulnerability of personal data in general.

On the technical side of the breach, several key questions remain unanswered. Still, there is much to learn from a breach of this magnitude for any organization that handles private data.

Who is Clearview AI?

Clearview AI is a New York City-based tech company that creates facial recognition software that allows organizations to access over 3 billion photos stored in its database. The company counts more than 600 law enforcement agencies among its clients, including the FBI and the Department of Homeland Security. Clearview AI’s client list also includes companies that use the service for commercial activities.

Clearview uses artificial intelligence to acquire images from social media platforms like Facebook, Twitter, YouTube, Venmo, and LinkedIn. Police detectives and other investigators match photos of suspects against Clearview’s database to get location information and other data that can help them solve crimes.

What was the Clearview AI breach?

In early 2020, Clearview notified clients that it had experienced a data breach. The breached data primarily affected law enforcement agencies, whose names were exposed publicly. Hackers also released information about the number of user accounts each client had opened and the searches they conducted.

Importantly, Clearview claims its massive image database and customer search histories were not part of the breach. Still, cybersecurity experts have questioned whether the company has reported the full extent of the breach. Clearview has been unwilling to disclose when the breach occurred, exactly how much information was stolen, or how hackers were able to gain access. The company stated only that a malicious actor had “gained unauthorized access.”

What can we learn from the Clearview AI breach?

We don’t have a solid understanding of Clearview’s security vulnerabilities at the time of the breach. However, the situation raises several questions with potentially widespread implications for any organization that handles personal data.

1. Is “hacktivism” against corporations and organizations based on ethical issues on the rise?

Hacktivism is “the act of misusing a computer system or network for a socially or politically motivated reason,” according to the tech information portal TechTarget. Some industry analysts speculate that hacktivists targeted Clearview AI because they feel the company’s data gathering and sharing practices threaten civil liberties and the constitutional right to privacy.

If ethical issues were at the heart of the Clearview attack, it wouldn’t be the first time. Several high-profile breaches have been ascribed to hacktivism in the past few years:

· Hackers published 26,000 email addresses and passwords stolen from adult entertainment website pron.com in 2011 in an apparent attempt to embarrass users.

· Hacktivist group Anonymous targeted the Church of Scientology through several methods, including a denial-of-service (DoS) attack in 2008.

· A hacker group called “The Impact Team” breached Ashley Madison, an extramarital dating platform, in 2015, exposing the email addresses and other identifying information of 39 million users.

According to some industry experts, hacktivism may be on the rise alongside widely reported events like the coronavirus pandemic. Hackers have targeted government and public health data related to the crisis.

2. What kind of sensitive data is more likely to attract bad actors?

In general, hackers are driven by activism, as described above, by profit, or by both. Profit-driven attackers target data they can turn into money.

Criminology expert Mike McGuire estimates that cybercrime generates more than $1 trillion in revenue annually. Hackers build multinational operations that can generate enormous one-time profits or diversify across several small-scale enterprises.

A good rule of thumb is that data valuable to your company is also valuable to bad actors. There are hundreds of entities that will readily buy data from hackers for wide-ranging purposes. Companies that need to gather, store, and use sensitive data must think carefully about how they publicize the extent of their data stores, and they must establish best practices aimed at reducing the risk of data theft.

3. How can unsupervised learning prevent a personal data breach?

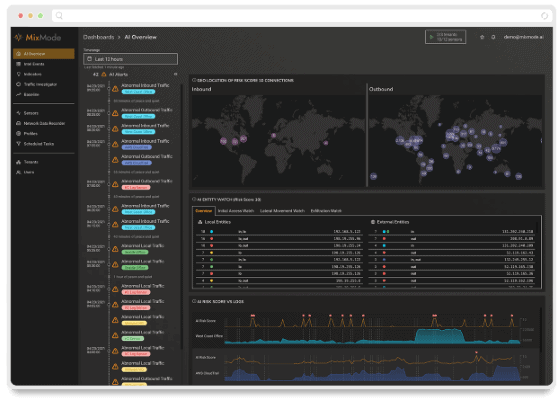

Beyond common-sense precautions, organizations can decrease data theft risks by bringing authentic unsupervised learning onboard. The MixMode platform can predict thefts before they happen through context-aware AI. Best of all, MixMode can create a baseline analysis of a network in just a few days, and then apply that knowledge to home in on anomalies as they occur.
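To make the idea of "baseline, then flag anomalies" concrete, here is a minimal Python sketch. It is not MixMode's method, which this article does not describe. It assumes a much simpler technique: summarize a period of normal traffic as a mean and standard deviation, then flag new readings that fall far outside that range. The function names, the traffic figures, and the threshold of 3 standard deviations are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize a period of normal traffic as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)


def find_anomalies(baseline, observations, threshold=3.0):
    """Return (index, value) pairs lying more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [(i, v) for i, v in enumerate(observations) if v != mu]
    return [
        (i, v)
        for i, v in enumerate(observations)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical hourly outbound traffic in megabytes (illustrative data only).
baseline_mb = [120, 135, 128, 140, 122, 131, 126, 138]
baseline = build_baseline(baseline_mb)

# New readings; the third one resembles a large, unexpected data transfer.
new_mb = [129, 133, 980, 125]
anomalies = find_anomalies(baseline, new_mb)
print(anomalies)
```

A real network has far more signals than a single traffic counter, and normal behavior shifts over time, so a production system would need a richer model than this fixed statistical summary. The sketch only shows why a learned baseline lets unusual activity stand out without anyone writing a rule for it in advance.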

When it comes to data security, a proactive solution wins out over a reactive approach, every time. Learn more about how MixMode can enhance network security through smart AI and set up a demo today.
