MixMode Forensic History

Historically, the MixMode platform has provided its users with forensic hunting capabilities backed by intel-based Indicators and Security Events from public and proprietary sources. While these detections still have their place in the security ecosystem, the rise in state-sponsored attacks, insider threats and adversarial artificial intelligence means there are simply too many threats to your network to rely solely on intelligence-based detections or proactive hunting.

Many of these threats are sophisticated enough to evade traditional threat detection, and in the case of zero-day threats, signature-based detection may not be possible at all.

In the face of this growing threat, the best defense is to supplement these traditional methods with anomaly detection, a term that is quickly losing its specific meaning as it is used loosely throughout the industry.

Here we will discuss some of the opportunities and challenges that arise with anomaly detection, as well as MixMode’s unique approach to the problem.

The Challenge of Cybersecurity Anomaly Tracking

Dictionary.com defines an anomaly as “something that deviates from what is standard, normal, or expected.” This simple definition captures exactly the concept we are referring to in cybersecurity. With this definition in hand, the first question becomes: how do we determine what is normal within a given network environment?

This notion of “normal” is commonly referred to as a “baseline” of expected activity and serves as the basis for determining the presence of an anomaly.
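To make the idea of a baseline concrete, here is a minimal sketch of one common, generic way to express it: summarize past observations of a single metric by their mean and standard deviation, then flag new values whose z-score exceeds a threshold. The metric, the sample values and the threshold of 3 are illustrative assumptions, and this is not how MixMode builds its baseline; a real network baseline tracks many metrics at once.

```python
from statistics import mean, stdev


def build_baseline(observations):
    """Summarize 'normal' as the mean and sample standard deviation of past observations."""
    return mean(observations), stdev(observations)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation seen so far: anything different from the mean is a deviation
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly outbound megabytes observed for one host
history = [120, 115, 130, 125, 118, 122, 127, 119]
baseline = build_baseline(history)

print(is_anomalous(124, baseline))  # False: within normal variation
print(is_anomalous(480, baseline))  # True: far outside the baseline
```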

For many IT organizations, defining a baseline of normal behavior is quite challenging. The difficulties include incomplete logging, misconfigured systems and a lack of reporting across a variety of systems.

For example, some organizations may have extensive network performance data but lack critical host information, and are therefore unable to determine whether increased network traffic is the result of a misconfigured or unauthorized device on the network.

For those that do have this data, the next challenge is collecting and analyzing it properly to produce a reasonably accurate representation of “normal” (the baseline), so that any deviations can be recognized quickly and easily. Again, for many organizations this is a difficult task, since it can involve obtaining and processing large volumes of data from many different sources. In most cases, enterprises must spend 6 to 24 months training a system on this data.

The second challenge, once the baseline is created, is structuring the data so that it is possible to sift through large volumes of it and recognize aberrations that may indicate a cybersecurity threat.

These two challenges are at the very forefront of the AI movement within cybersecurity.

Assuming we are going to use AI to create our baseline and determine the presence of anomalous activities, the question now becomes – which type of AI is best suited to the task?

For all intents and purposes, there are two primary forms of Artificial Intelligence to consider: Supervised Machine Learning and Unsupervised Machine Learning.

Let’s break down what each means and which we believe is best suited for network anomaly detection.

Supervised Machine Learning

Most cybersecurity tools on the market today use a form of supervised machine learning. A typical supervised learning and anomaly detection process has six stages:

- Datasets created by the third-party vendor are fed into the AI system.

- Data models are developed based on the vendor-created datasets – this is the aforementioned baseline.

- A potential anomaly is flagged each time a behavior deviates from the baseline.

- A security engineer evaluates the anomalies to determine validity.

- The AI takes this “feedback” and revises its baseline for future predictions.

- The system continues to accumulate patterns based on the vendor datasets (the first stage) and the engineer’s feedback (the fourth stage).
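The supervised stages above can be sketched as a small loop. This is a simplified illustration, assuming a nearest-centroid classifier as a stand-in for whatever model a vendor actually uses; the two features (kilobytes transferred and connection count), the labels and the sample data are all hypothetical.

```python
from statistics import mean


def fit_centroids(labeled):
    """Compute one centroid (per-feature mean) for each label from (features, label) pairs."""
    by_label = {}
    for features, label in labeled:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(col) for col in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: dist(centroids[label]))


# Stage 1: hypothetical vendor-supplied training set of (kb_sent, connections) -> label
training = [((10, 2), "normal"), ((12, 3), "normal"),
            ((90, 40), "anomalous"), ((85, 35), "anomalous")]

# Stage 2: build the model (the "baseline") from the vendor data
model = fit_centroids(training)

# Stage 3: new traffic is classified and possibly flagged
event = (50, 20)
flag = predict(model, event)  # "anomalous" with this sample data

# Stage 4: a security engineer reviews the flag and supplies the correct label
analyst_label = "normal"

# Stages 5-6: the feedback joins the training data and the model is refit
training.append((event, analyst_label))
model = fit_centroids(training)
```

Before the feedback, the borderline event is labeled anomalous; after the engineer’s correction is folded back in, the refit model labels it normal. The model only knows what its labeled data tells it, which is the dependence on vendor datasets described above.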

Unsupervised Artificial Intelligence

MixMode utilizes a more advanced type of Artificial Intelligence that is unlike anything else in the market. Whereas most cybersecurity tools utilize the supervised machine learning described above (leveraging static training data), MixMode’s AI is a form of unsupervised machine learning that uses an awareness of the network within which it is deployed (its context) to create its baseline by learning what is normal for your specific network.

- The AI ingests a variety of inputs from the network on which it is deployed.

- The inputs (not training data) are used to form the data models upon which decisions are made.

- A potential anomaly is flagged each time a behavior deviates from the baseline.

- A security engineer evaluates the anomalies to determine validity.

- The AI takes this “feedback”, as well as any ongoing changes to the inputs, and revises its baseline for future predictions.

- The system continues to accumulate patterns based on the network inputs (the first stage) and the engineer’s feedback (the fourth stage).
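The unsupervised stages can be sketched in the same spirit. This minimal example assumes a single numeric metric and uses Welford’s online algorithm for a running mean and variance as a stand-in for MixMode’s proprietary models: the baseline is learned only from values observed on the network, with no labeled training data, and values an engineer marks as benign are folded back into it. The class name, threshold and warm-up count are hypothetical.

```python
import math


class OnlineBaseline:
    """Running mean/variance (Welford's algorithm) learned only from live observations."""

    def __init__(self, threshold=3.0, warmup=5):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean
        self.threshold = threshold
        self.warmup = warmup  # observations to learn before flagging anything

    def update(self, x):
        """Fold one observation into the running statistics."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self):
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

    def score(self, x):
        """Z-score of x against the current baseline."""
        s = self.std()
        return abs(x - self.mean) / s if s > 0 else 0.0

    def observe(self, x):
        """Return True if x deviates from the baseline; otherwise learn from it."""
        if self.n >= self.warmup and self.score(x) > self.threshold:
            return True  # held back for engineer review, not learned yet
        self.update(x)
        return False

    def accept_feedback(self, x, benign):
        """An engineer reviews a flagged value; benign values become part of 'normal'."""
        if benign:
            self.update(x)


# Hypothetical per-minute inbound connection counts from the monitored network
baseline = OnlineBaseline()
flags = [baseline.observe(v) for v in [100, 104, 98, 101, 99, 103, 400]]
```

Flagged values are deliberately kept out of the baseline until reviewed, so a single spike cannot quietly redefine what counts as normal.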

This matters because the method that a tool uses to create and learn its baseline directly impacts the quality of the decisions that it makes.

As discussed above, supervised learning creates its baseline (i.e. its understanding of what is normal) from training data that is created and provided by the cybersecurity vendor.

The supervised learning method has four primary limitations:

- Supervised learning is not able to adapt to dynamically changing attack signatures.

- Broad learning settings result in false positives, while narrow settings can lead to false negatives.

- Supervised learning systems can be manipulated by adversarial AI.

- It takes 6 to 24 months to train such a system, followed by ongoing training and tuning to make it work reasonably well.
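The trade-off between broad and narrow settings can be illustrated with a detection threshold. In this sketch, we assume a “broad” setting corresponds to a low threshold that flags more activity and a “narrow” setting to a high threshold that flags less; that mapping, the scores, the ground-truth labels and the thresholds are all invented for illustration.

```python
def confusion(scores, labels, threshold):
    """Count false positives and false negatives when flagging score >= threshold."""
    fp = sum(1 for s, bad in zip(scores, labels) if s >= threshold and not bad)
    fn = sum(1 for s, bad in zip(scores, labels) if s < threshold and bad)
    return fp, fn


# Hypothetical anomaly scores and ground truth (True = actually malicious)
scores = [0.1, 0.3, 0.35, 0.5, 0.6, 0.7, 0.8, 0.95]
labels = [False, False, False, True, False, True, True, True]

for name, threshold in [("broad", 0.25), ("balanced", 0.5), ("narrow", 0.85)]:
    fp, fn = confusion(scores, labels, threshold)
    print(f"{name:8} threshold={threshold}: {fp} false positives, {fn} false negatives")
```

With these sample values, the broad threshold yields 3 false positives and no false negatives, while the narrow one yields no false positives and 3 false negatives.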

MixMode’s unsupervised artificial intelligence does not learn from training data. Unsupervised machine learning uses the very nature of the environment within which it is deployed to create the baseline upon which decisions are made. This method has four primary benefits:

- The AI is capable of understanding its environment based on its own contextual information, without the need for tuning or vendor-provided data.

- Unsupervised AI is particularly good at detecting events that it has never seen before (Zero Day activities & attacks).

- Unsupervised AI is not susceptible to manipulation by adversarial AI because it does not rely on signatures or attack vectors to recognize threats and anomalies.

- Unsupervised AI can learn and create a baseline of a network in a few days, far faster than the typical supervised system.

MixMode Introduces Unsupervised AI Indicators

With our August release, the MixMode platform leverages this proprietary AI to deliver a feed of AI anomalies in tandem with the more traditional intelligence-based alerting.

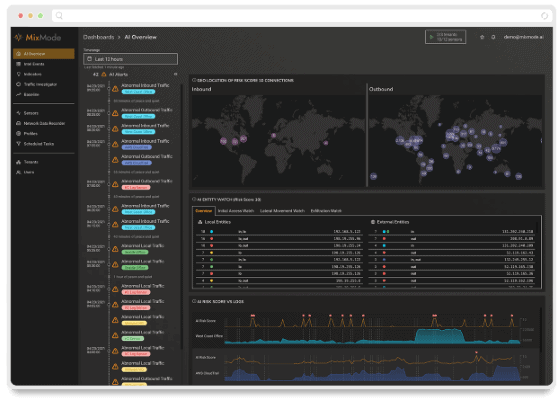

As the screenshot shows, the main landing page in the MixMode user interface now provides an interactive timeline view of AI indicators in tandem with security events. Within the interface, you can click and drag within the AI Indicators window to isolate a given time period and research the flagged anomaly.

Clicking into a given anomaly record surfaces the information in the screenshot below. From this window we can easily see the suspected IPs for this indicator, grouped by source and destination (1). In addition, you can download the associated PCAPs (2) and dig into the relevant flow logs (3). This level of detail provides the context necessary to quickly evaluate the anomalous activity and make decisions.
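Grouping suspected IPs by source and destination amounts to aggregating flow records by endpoint pair. A minimal sketch, assuming flow records are dictionaries with hypothetical `src`, `dst` and `bytes` fields (not MixMode’s actual flow log schema) and using documentation-range IP addresses:

```python
from collections import Counter

# Hypothetical flow-log records; field names are illustrative only
flows = [
    {"src": "10.0.0.5", "dst": "203.0.113.7", "bytes": 1200},
    {"src": "10.0.0.5", "dst": "203.0.113.7", "bytes": 800},
    {"src": "10.0.0.9", "dst": "198.51.100.2", "bytes": 300},
]


def group_by_endpoints(records):
    """Aggregate flow count and total bytes per (source, destination) pair."""
    counts = Counter()
    volume = Counter()
    for record in records:
        key = (record["src"], record["dst"])
        counts[key] += 1
        volume[key] += record["bytes"]
    return {key: (counts[key], volume[key]) for key in counts}


for (src, dst), (n_flows, total) in group_by_endpoints(flows).items():
    print(f"{src} -> {dst}: {n_flows} flows, {total} bytes")
```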

AI Indicators Example

In the example below, the intelligence-based alerts for the 10:00 hour do not show anything of interest. In fact, the events and indicators from this time period are all given a rank of 1 (the lowest possible score).

This means that traffic crossing the MixMode sensor was noticed for some characteristic, which is why an indicator was raised, but the type of indicator was determined to be benign, so its risk and impact are diminished. Essentially, from the perspective of known threats, attack signatures and malicious files, there is nothing of significant risk in the 10:00 timeframe.

Turning to the AI indicators for the same time period, we see a different story: the AI has produced a level 10 indicator for abnormal activity in the inbound flows. This is clearly shown both in the timeline view at the top (1) and in the record details at the bottom (2).

From this screen we can click into the record details for this indicator, where we can quickly identify the subject IP addresses grouped by source and destination (1), download the PCAPs (2) and review the connection logs (3). This gives the security practitioner everything needed to investigate and address this anomalous activity.

Conclusion

Cyber attacks are evolving quickly and protection at the perimeter through traditional methods is no longer sufficient.

State-sponsored attackers and those leveraging adversarial AI can pass through traditional defenses, blend into an environment and remain undetected as long as necessary to carry out their malicious objective. Detecting these clandestine assaults requires complete visibility, vigilant monitoring and a thorough understanding of what is considered “normal” behavior in your environment.

The modern security practitioner must leverage a combination of traditional signature-based detections as well as (unsupervised) AI-informed anomaly tracking and detection to keep pace with the threat landscape.
