As the Coronavirus spreads across the globe, growing fears have led to huge shifts in the economy, social interactions, and business practices. Many companies are asking their employees to work from home and encouraging social distancing. That may keep employees safer from catching COVID-19, but it leaves businesses even more vulnerable to hackers looking to infiltrate their networks.

While the world is scrambling to contain the pandemic, hackers have already begun crafting Corona-specific scams to exploit the global panic.

Phishing scams feeding off Coronavirus fears have taken advantage of the current state of national emergency to trick concerned citizens into opening malicious PDFs that claim to help protect them from the disease.

“Go through the attached document on safety measures regarding the spreading of coronavirus,” reads the message, which purports to come from a virologist. “This little measure can save you.”

Wired

If anything, this proves how quickly hackers can adapt to current events and incorporate them into their crimes. They change the subject of their emails depending on what most people will struggle to resist clicking on. During tax season, for example, you may receive a phishing email that claims to have urgent information about your tax return.

However, seasonally themed phishing emails have a far lower success rate than those written about natural disasters, political upsets, or global emergencies like the present one.

As hackers continue to develop their phishing methods with advanced tools like Artificial Intelligence and Machine Learning, they are becoming more and more capable of automating these systems and creating “individualized” email language that appears completely normal to the human eye.

Certain adversarial Machine Learning algorithms are able to change email body sentences on a massive scale without breaking their grammar, because the grammatical rules have been programmed in. As a result, it is nearly impossible for a human being to distinguish between an AI-crafted phishing email and a regular one.

This is where the need for AI in cybersecurity comes into play.

A cybersecurity system equipped with the right kind of AI, one developed using a Generative Unsupervised Learning training model, would be able to catch even the most cleverly disguised hackers.

Most of the Artificial Intelligence platforms currently available on the market employ Supervised Learning, or Second Wave AI, to catch breaches. However, AI trained with Supervised Learning is unable to catch something that it has never learned to expect, because it has to be told what something abnormal looks like.
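To make that limitation concrete, here is a minimal toy sketch in Python. It is not how any commercial product works. The function names, the training emails, and the scoring rule are all assumptions made up for illustration. The "detector" learns only from labeled examples, so a lure built around a topic it was never shown scores as harmless.

```python
from collections import Counter

PUNCT = ".,!?:;\"'"

def tokenize(text):
    """Lowercase the text and strip simple punctuation from each word."""
    words = (w.strip(PUNCT).lower() for w in text.split())
    return [w for w in words if w]

def train(labeled_emails):
    """Count how often each word appears under each label (hypothetical helper)."""
    counts = {"phish": Counter(), "ham": Counter()}
    for text, label in labeled_emails:
        counts[label].update(tokenize(text))
    return counts

def phish_score(counts, text):
    """Fraction of words seen more often in phishing than in legitimate training mail."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    hits = sum(1 for t in tokens if counts["phish"][t] > counts["ham"][t])
    return hits / len(tokens)

# Invented training set: the labeled phishing examples are all tax-themed.
training = [
    ("Urgent: your tax refund is pending, verify your account now", "phish"),
    ("Tax return error, confirm your bank details immediately", "phish"),
    ("Meeting notes attached for your review", "ham"),
    ("Please go through the attached document before our call", "ham"),
    ("Lunch on Friday?", "ham"),
]
model = train(training)

# A lure that resembles the training data, and one on a topic never seen before.
known_lure = "Urgent tax refund notice: verify your bank account"
novel_lure = ("Go through the attached document on safety measures "
              "regarding the spreading of coronavirus")

known_score = phish_score(model, known_lure)
novel_score = phish_score(model, novel_lure)
```

In this toy setup the tax-themed lure scores high, while the Coronavirus lure scores zero because none of its words were ever labeled as suspicious.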

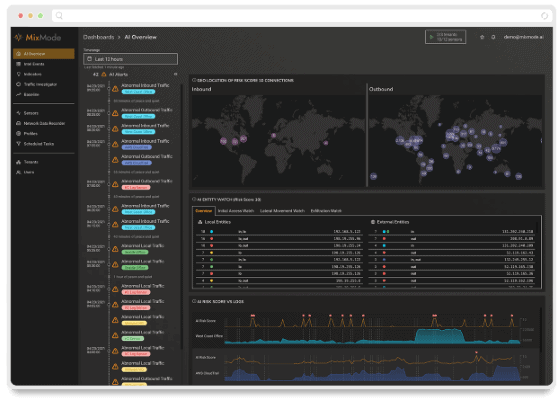

A Generative Unsupervised Learning model, on the other hand, is programmed to study the network and understand what the network should look like at all times, even five minutes into the future. That way, if something unusual appears on the network, the AI knows it should not be there because it knows what the network is supposed to look like and the abnormality does not fit in.
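The idea of learning what the network should look like, and flagging whatever does not fit, can be sketched with a much simpler stand-in. The Python example below is not a generative model and is not MixMode's implementation. It uses a plain per-hour mean and standard deviation, and the function names, traffic figures, and the three-sigma threshold are all assumptions chosen for illustration. What it shares with the approach described above is that it needs no labeled attacks: it learns an expected range from ordinary traffic and can state that range for an hour that has not happened yet.

```python
from statistics import mean, stdev

def learn_baseline(history):
    """Learn (mean, stdev) of traffic volume per hour of day from unlabeled samples.

    history: iterable of (hour_of_day, megabytes_transferred) pairs.
    """
    by_hour = {}
    for hour, value in history:
        by_hour.setdefault(hour, []).append(value)
    # stdev needs at least two samples, so skip hours with less data.
    return {h: (mean(v), stdev(v)) for h, v in by_hour.items() if len(v) >= 2}

def expected_range(baseline, hour, k=3.0):
    """Predict the range of normal traffic for a given hour (k standard deviations)."""
    mu, sigma = baseline[hour]
    return mu - k * sigma, mu + k * sigma

def is_anomalous(baseline, hour, observed, k=3.0):
    """Flag an observation that falls outside the expected range for its hour."""
    low, high = expected_range(baseline, hour, k)
    return not (low <= observed <= high)

# Invented traffic history: quiet at 2 a.m., busy at 2 p.m.
history = [(2, 100), (2, 110), (2, 90), (2, 105), (2, 95),
           (14, 1000), (14, 1100), (14, 900), (14, 1050), (14, 950)]
baseline = learn_baseline(history)

# The same volume is ordinary in the afternoon but out of place at night.
afternoon_alert = is_anomalous(baseline, 14, 1000)
night_alert = is_anomalous(baseline, 2, 1000)
```

Here a 1000 MB transfer is unremarkable at 2 p.m. but is flagged at 2 a.m., even though nobody ever told the model what an attack looks like.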

Book a demo of MixMode today.
