This is the final article of a three-part series about improving the black box approach to cybersecurity. Read part one here and part two here.

Making an Informed Cloud Security Decision

We aren’t saying that organizations using hybrid cloud environments can’t assemble siloed solutions that ultimately provide an acceptable level of oversight. For example, an organization could put together a Frankenstein-esque set of solutions that includes things like:

● A multi-cloud, log-based security platform like Lacework

● A machine learning team that examines forensic data

● A platform to manage flow logs

● A CloudTrail-focused tool

● Network traffic analysis tools

● Human power to drive the various tools

The list goes on. It’s no wonder so many organizations are seeking viable alternatives to this complicated, chaotic approach to cloud security.

The Role of AI in Cloud Security

While organizations don’t necessarily need to understand every technical aspect of how AI works, it’s a good idea to have a firm grounding in the basics when choosing which AI solutions are trustworthy and reliable enough. Ideally, the AI itself should be able to explain to the operator why it is making its decisions.

Many organizations rely on neural networks whose machine learning depends on the accuracy of manually constructed training data. Errors in that training data lead to overlooked anomalous behavior and, often, mountains of false positive and false negative flags that human analysts have to review.

The Insufficiency of Black Box Approaches in Today’s Threat Environment (and the Best Viable Alternative)

MixMode delivers massive efficiency and effectiveness gains over traditional “black box” neural network solutions that deliver few actionable insights about threat behavior. The recent MIT research publication, “Why Are We Using Black Box Models in AI When We Don’t Need To? A Lesson from an Explainable AI Competition,” provides an excellent example of why black box solutions are insufficient and, often, a source of end-user confusion:

“Our team decided that for a problem as important as credit scoring, we would not provide a black box to the judging team merely for the purpose of explaining it. Instead, we created an interpretable model that we thought even a banking customer with little mathematical background would be able to understand,” the paper reads. “The model was decomposable into different mini-models, where each one could be understood on its own. We also created an additional interactive online visualization tool for lenders and individuals. Playing with the credit history factors on our website would allow people to understand which factors were important for loan application decisions. No black box at all. We knew we probably would not win the competition that way, but there was a bigger point that we needed to make.”
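The idea of a model that is “decomposable into different mini-models” can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the model from the paper: the factor names, point values, and function names below are all hypothetical. What it shows is that when each mini-model scores a single factor and the total is a plain sum, every decision comes with its own explanation.

```python
from typing import Callable, Dict

# Hypothetical mini-models: each one scores a single credit-history factor.
# The factor names and point values are invented for illustration only.

def payment_history_points(missed_payments: float) -> float:
    """Fewer missed payments earn more points (0 to 40)."""
    return max(0.0, 40.0 - 10.0 * missed_payments)

def utilization_points(utilization: float) -> float:
    """Lower credit utilization (a fraction from 0 to 1) earns more points (0 to 30)."""
    clamped = min(max(utilization, 0.0), 1.0)
    return 30.0 * (1.0 - clamped)

def history_length_points(years: float) -> float:
    """Longer credit history earns more points, capped at 10 years (0 to 30)."""
    return 3.0 * min(max(years, 0.0), 10.0)

MINI_MODELS: Dict[str, Callable[[float], float]] = {
    "missed_payments": payment_history_points,
    "utilization": utilization_points,
    "years_of_history": history_length_points,
}

def explain(applicant: Dict[str, float]) -> Dict[str, float]:
    """Return the contribution of every factor, so each can be inspected on its own."""
    return {name: model(applicant[name]) for name, model in MINI_MODELS.items()}

def total_score(applicant: Dict[str, float]) -> float:
    """The overall score is just the sum of the mini-model contributions."""
    return sum(explain(applicant).values())

if __name__ == "__main__":
    applicant = {"missed_payments": 1, "utilization": 0.5, "years_of_history": 4}
    for factor, points in explain(applicant).items():
        print(f"{factor}: {points:.1f} points")
    print(f"total: {total_score(applicant):.1f}")
```

Changing one input and rerunning shows which factor moved the score and by how much, which is the kind of interaction the quoted team describes offering lenders and individuals.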

We can also consider the Science Magazine article, “How AI detectives are cracking open the black box of deep learning.” Here, the author credits advancements in neural network technology with delivering on certain tasks, but also notes that those advancements have yet to move beyond a black box outcome: the neural network delivers a result but very little insight into how it got there. That approach is clearly opposed to the goals of cybersecurity.

“Today’s neural nets are far more powerful … but their essence is the same. At one end sits a messy soup of data—say, millions of pictures of dogs,” the author writes. “Those data are sucked into a network with a dozen or more computational layers, in which neuron-like connections ‘fire’ in response to features of the input data. Each layer reacts to progressively more abstract features, allowing the final layer to distinguish, say, a terrier from a dachshund.”

“At first, the system will botch the job. But each result is compared with labeled pictures of dogs,” the article continues. “The outcome is sent backward through the network, enabling it to reweight the triggers for each neuron. The process repeats millions of times until the network learns — somehow — to make fine distinctions among breeds.”

Modern horsepower can create powerful neural networks, the author writes, but adds that this “mysterious and flexible power is precisely what makes them black boxes.”
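The training loop the article describes (make a prediction, compare it with a label, send the error backward, reweight) can be sketched with a single artificial neuron in plain Python. This is a deliberately tiny illustration, not a deep network: the two input features, the toy data, and the function names are assumptions made for the example.

```python
import math
import random
from typing import List, Tuple

def sigmoid(z: float) -> float:
    """Squash a raw activation into a 0-to-1 'firing' strength."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights: List[float], bias: float, x: List[float]) -> float:
    """Forward pass: weighted sum of the inputs, then the sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(samples: List[List[float]], labels: List[int],
          epochs: int = 2000, lr: float = 0.5,
          seed: int = 0) -> Tuple[List[float], float]:
    """Repeatedly compare predictions with labels and reweight the neuron."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in samples[0]]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            # Error signal sent "backward": the gradient of the cross-entropy
            # loss with respect to the neuron's raw activation.
            error = predict(weights, bias, x) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

if __name__ == "__main__":
    # Hypothetical two-feature data: label 1 = "terrier", label 0 = "dachshund".
    samples = [[0.9, 0.3], [0.8, 0.4], [0.2, 0.9], [0.3, 0.8]]
    labels = [1, 1, 0, 0]
    weights, bias = train(samples, labels)
    print("learned weights:", weights, "bias:", bias)
    print("prediction for [0.85, 0.35]:", predict(weights, bias, [0.85, 0.35]))
```

With one neuron and two weights, the learned numbers can still be read by a person. The opacity the author describes arises when the same procedure tunes millions of weights across a dozen or more layers, where no individual weight corresponds to an explanation an analyst could act on.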

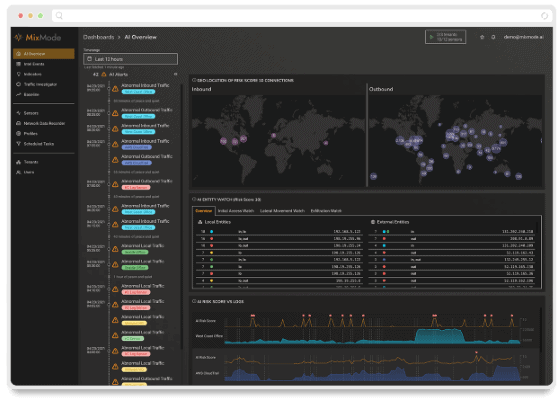

MixMode’s third-wave AI, free from the neural network model, explains exactly why anomalies are detected. MixMode uses aspects of spectral analysis to explain specifically how observed behavior differs from expected behavior. The result is a true AI-powered cybersecurity approach that organizations can fully trust.

Learn more about MixMode, now available with integrated AWS and CloudTrail support, and set up a demo today.
