Cybersecurity professionals are acutely aware of the ever-evolving threat landscape. As attacks become more sophisticated and data breaches continue to dominate headlines, the need for cutting-edge solutions is paramount. Artificial intelligence (AI) has emerged as a potential game-changer, promising faster threat detection, proactive prevention, and a stronger security posture.

We at MixMode just released our inaugural State of AI in Cybersecurity 2024 report, offering invaluable insights into how organizations are harnessing AI’s power to bolster their defenses.

To help unlock the potential of artificial intelligence in cybersecurity, we’re going beyond the report with a blog series that explores specific findings in more depth, including:

- The current landscape of AI adoption: How widespread is AI usage, and what are the key challenges organizations face?

- How organizations utilize AI: How are organizations using AI for cyber defense, and how is it evolving to help cybersecurity teams stay ahead of the curve?

- The real-world benefits of AI: How does AI save organizations time, money, and valuable data?

If you haven’t already, download the report and follow along with the series to gain practical insights and strategies for implementing AI in your security framework.

Organizations Have Been Slow to Adopt AI

Despite AI’s potential to help defend against cyber attacks, AI adoption in cybersecurity practices remains in its early stages. Why is this the case, and how can organizations overcome these hurdles to pave the way for a secure future?

There are several key reasons why the cybersecurity space has been slow to adopt AI solutions from vendors despite the potential benefits:

1. Overhyped Expectations: Early AI solutions often made bold claims about their capabilities to enhance security infrastructure, leading to inflated expectations and subsequent disappointment when real-world performance fell short. This created a sense of skepticism among security teams.

2. Lack of Transparency and Explainability: Some AI algorithms’ “black box” nature made it difficult for security professionals to understand how decisions were made, hindering trust and confidence in their effectiveness.

3. Data Requirements and Integration Challenges: Training and deploying AI effectively often requires large amounts of high-quality data, which can be challenging for organizations to collect and integrate with existing security systems.

4. Skill Gap and Expertise: Implementing and managing AI solutions requires specific technical skills and expertise that may be limited within security operations centers. This can lead to implementation delays and ongoing struggles.

5. Budgetary Constraints: The cost of acquiring, implementing, and maintaining AI solutions can be significant, especially for smaller organizations. This can be a substantial barrier to adoption.

Understanding the Hesitation: Barriers to AI Adoption

While over half of organizations acknowledge the potential of AI, a mere 18% report full deployment and security risk assessment at each stage. This hesitancy can be attributed to several key factors:

1. Lack of Clarity on Use Cases: The vast potential of AI can be overwhelming, leading to confusion about where and how to best implement it. Organizations struggle to identify specific use cases that align with their unique needs and security priorities.

2. Data Challenges: Effective AI models require high-quality, relevant data. Many organizations lack the necessary data infrastructure, cleaning capabilities, and governance practices to ensure their data is suitable for AI training and deployment.

3. Talent and Expertise: Implementing and managing AI solutions requires specialized skills and expertise in data science, machine learning, and cybersecurity. Finding and retaining these professionals can take time and effort.

4. Explainability and Bias: Some AI models’ “black box” nature raises concerns about transparency and potential biases. Organizations worry about understanding how decisions are made and ensuring algorithms are fair and unbiased, especially in critical security applications.

5. Security Risks of the Technology: Ironically, the technology designed to enhance security presents potential cybersecurity risks. Concerns around vulnerabilities, adversarial attacks, and unintended consequences of AI models deter some organizations from embracing it wholeheartedly.

Fool Me Once, Fool Me Twice

Here are some ways security teams have been burned before with AI solutions:

- False positives: AI systems can generate a high number of false positives, wasting time and resources investigating non-threats.

- Limited threat detection: Some AI solutions struggle to detect unknown threats or sophisticated attacks, leaving organizations vulnerable.

- Integration issues: Integrating AI with existing security tools can be complex and time-consuming, leading to compatibility problems and performance issues.

- Lack of customization: Off-the-shelf AI solutions may not be able to adapt to an organization’s specific needs and environment, reducing their effectiveness.
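Part of why false positives pile up is simple arithmetic: real attacks are rare, so even a detector that looks accurate on paper can bury analysts in false alerts. The minimal sketch below works through this base-rate effect. The function names and all rates are hypothetical assumptions chosen for illustration, not figures from the report.

```python
# Illustrative sketch: why rare attacks make false positives dominate the alert queue.
# All rates below are hypothetical assumptions, not figures from the report.


def alert_precision(base_rate: float, detection_rate: float, false_positive_rate: float) -> float:
    """Fraction of raised alerts that correspond to real threats.

    base_rate: share of events that are actually malicious
    detection_rate: chance that a malicious event raises an alert
    false_positive_rate: chance that a benign event raises an alert
    """
    true_alerts = base_rate * detection_rate
    false_alerts = (1.0 - base_rate) * false_positive_rate
    return true_alerts / (true_alerts + false_alerts)


def daily_alerts(events_per_day: int, base_rate: float,
                 detection_rate: float, false_positive_rate: float) -> tuple[float, float]:
    """Expected (true, false) alert counts per day."""
    malicious = events_per_day * base_rate
    benign = events_per_day - malicious
    return malicious * detection_rate, benign * false_positive_rate


if __name__ == "__main__":
    # Assume 1 in 1,000 events is malicious; the detector catches 99% of them
    # and wrongly flags 1% of benign events.
    print(f"{alert_precision(0.001, 0.99, 0.01):.1%}")  # roughly 9% of alerts are real
    true_a, false_a = daily_alerts(1_000_000, 0.001, 0.99, 0.01)
    print(f"true alerts: {true_a:.0f}, false alerts: {false_a:.0f}")
```

With these assumed numbers, a detector that is right 99% of the time on each class still raises roughly ten false alerts for every real one, which is why the false positive rate matters more to a SOC than headline accuracy.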

Overcoming the Hurdles: Steps Towards Successful AI Adoption

Despite these challenges, the potential benefits of AI in cybersecurity are undeniable. Organizations can navigate these hurdles and chart a course toward successful AI adoption by taking the following steps:

1. Start Small and Specific: Instead of aiming for a massive overhaul, identify high-value use cases where AI can improve security. Begin with pilot projects in controlled environments that focus on tasks such as malware detection or log analysis.

2. Invest in Data Infrastructure: Prioritize data quality and governance. Clean and organize your data, establish clear access controls, and ensure its relevance to the chosen AI use case.

3. Build Your Team or Partner: Collaborate with internal data science and security experts or consider partnering with external specialists to address talent gaps and access expertise.

4. Focus on Explainability and Fairness: Choose AI models and algorithms that offer transparency and interpretability. Implement robust testing and monitoring procedures to identify and mitigate potential biases.

5. Prioritize Security of the Tool: Implement comprehensive security measures for your AI solution, including vulnerability assessments, penetration testing, and regular security updates.
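As a hypothetical illustration of steps 1 and 4 together, the sketch below runs a small, explainable log-analysis pilot: it flags hours whose failed-login count sits far above a historical baseline, reports the z-score behind each alert, and scores the pilot against analyst-labeled incidents. The data, the threshold, and the function names are assumptions for illustration. A simple statistical baseline like this is a starting point for measuring a pilot, not a description of any vendor’s product.

```python
from statistics import mean, pstdev

# Hypothetical pilot: hourly failed-login counts from one log source.
# The data, threshold, and function names are illustrative assumptions.


def flag_anomalies(baseline: list[int], observed: list[int], threshold: float = 3.0):
    """Flag observed counts whose z-score against the baseline exceeds threshold.

    Returns (hour_index, count, z_score) tuples so an analyst can see
    exactly why each alert fired.
    """
    mu = mean(baseline)
    sigma = pstdev(baseline) or 1.0  # guard against a perfectly flat baseline
    alerts = []
    for hour, count in enumerate(observed):
        z = (count - mu) / sigma
        if z > threshold:
            alerts.append((hour, count, round(z, 2)))
    return alerts


def precision_recall(flagged_hours, incident_hours):
    """Score the pilot against hours an analyst labeled as real incidents."""
    flagged, truth = set(flagged_hours), set(incident_hours)
    hits = len(flagged & truth)
    precision = hits / len(flagged) if flagged else 0.0
    recall = hits / len(truth) if truth else 0.0
    return precision, recall


if __name__ == "__main__":
    baseline = [4, 6, 5, 7, 5, 6, 4, 5]  # a quiet historical period (hypothetical)
    observed = [5, 6, 30, 4, 9, 7, 25]   # pilot window (hypothetical)
    alerts = flag_anomalies(baseline, observed)
    for hour, count, z in alerts:
        print(f"hour {hour}: {count} failed logins (z={z})")
    print(precision_recall([a[0] for a in alerts], incident_hours=[2, 6]))
```

In this made-up run, hours 2, 4, and 6 are flagged, but only hours 2 and 6 were real incidents, so precision is about 0.67 while recall is 1.0. Tracking both numbers during a pilot gives a concrete basis for deciding whether to expand a use case.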

The Future of AI in Cybersecurity: A Glimmer of Hope

While the current adoption rate suggests a gradual path forward, the future of AI in cybersecurity is undeniably bright. As organizations overcome the initial challenges and gain experience, we can expect the following:

1. Growing Confidence and Expertise: Improved understanding of AI capabilities and successful use cases will build trust and encourage wider adoption.

2. Advancements in Explainable AI: Developments in the field of Explainable AI (XAI) will address concerns about transparency and bias, making AI models more trustworthy.

3. Democratization of AI Tools: User-friendly, pre-trained AI solutions will empower organizations with limited resources to benefit from AI technology.

4. Continuous Learning and Adaptation: AI models will become more dynamic and self-learning, able to continuously adapt to evolving threats and improve their effectiveness over time.

5. Collaboration and Shared Knowledge: Increased collaboration between vendors, researchers, and users will accelerate innovation and knowledge sharing, benefitting the cybersecurity ecosystem.

How MixMode Can Help Security Teams with AI Adoption

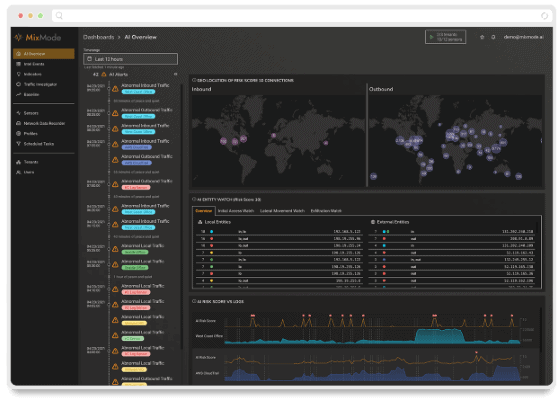

MixMode makes it easy for organizations to implement AI-powered threat detection, and the platform can be installed remotely in minutes. MixMode’s AI starts learning about a network immediately, unsupervised and without human input, and adapts to the specific dynamics of each network to help security teams in several key ways:

1. Adaptability and Self-supervised Learning: Unlike traditional detection tools that rely on static rules and signatures, MixMode’s AI is built on the principles of dynamical systems, allowing it to continuously adapt to the unique dynamics of each network. This means it can identify evolving anomalies and suspicious patterns in real time, including zero-day attacks, without requiring constant updates or manual tuning.

2. Contextual Awareness: MixMode’s AI analyzes data beyond individual events, considering contextual information like user behavior, network activity, and other key data points. This richer understanding enables it to differentiate between harmless activities and potential threats, significantly reducing false positives and allowing analysts to focus on the most critical incidents.

3. Proactive Threat Detection: Instead of simply reacting to alerts, MixMode’s AI can proactively identify abnormal activity and predict potential attacks before they occur. This enables security teams to take preventive measures and mitigate risks before they escalate into major incidents.

4. Advanced Attack Recognition: Traditional detection methods often struggle with sophisticated attacks, especially those leveraging AI. MixMode’s AI is designed to recognize and counter complex threats, including adversarial AI-based attacks. Because it relies on self-supervised learning, MixMode’s AI can adapt to new tactics and resist attempts to mimic its behavior, leaving attackers at a disadvantage.

5. Streamlined Workflow and Efficiency: MixMode’s AI frees up valuable time and resources for security teams by automating much of the detection process. This allows them to focus on high-level investigations, incident response, and strategic planning while the AI handles the heavy lifting of continuous threat monitoring.
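MixMode’s dynamical-systems model is not described in detail in this post, so the sketch below does not attempt to reproduce it. It illustrates only the general idea behind point 1: a detector that keeps re-learning what “normal” looks like from the data stream instead of checking events against a fixed rule. It uses a standard exponentially weighted mean and variance; the class name, parameters, and traffic values are hypothetical.

```python
import math


class AdaptiveBaseline:
    """Online anomaly scorer using an exponentially weighted mean and variance.

    A generic illustration of a detector that keeps updating its notion of
    "normal" as traffic drifts. Hypothetical example, not MixMode's algorithm.
    """

    def __init__(self, alpha: float = 0.1, threshold: float = 4.0, warmup: int = 5):
        self.alpha = alpha          # weight given to the newest observation
        self.threshold = threshold  # z-score above which a value is flagged
        self.warmup = warmup        # observations to absorb before flagging anything
        self.mean = None
        self.var = 0.0
        self.seen = 0

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then fold x into the baseline.

        Returns True if x looks anomalous relative to what came before.
        """
        self.seen += 1
        if self.mean is None:
            self.mean = float(x)
            return False
        diff = x - self.mean
        std = math.sqrt(self.var)
        is_anomaly = self.seen > self.warmup and std > 0 and abs(diff) / std > self.threshold
        # Standard incremental update for an exponentially weighted mean and variance.
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly


if __name__ == "__main__":
    detector = AdaptiveBaseline()
    traffic = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 500]  # hypothetical per-minute connection counts
    print([detector.update(x) for x in traffic])
```

Because every observation, including flagged ones, is folded back into the baseline, this detector follows genuine shifts in traffic, but a patient attacker who ramps up slowly could drag the baseline along too. Choosing `alpha` and deciding whether to exclude flagged points from the update are the key trade-offs in this simple approach.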

Overall, MixMode’s advanced AI empowers security teams with:

- Enhanced visibility and awareness of their network environment.

- Faster and more accurate detection of threats, including novel and sophisticated attacks.

- Reduced workload and improved efficiency for security personnel.

- Proactive defense capabilities to mitigate risks and prevent incidents.

The road to successful AI adoption in cybersecurity requires careful planning, collaboration, and a commitment to addressing potential risks. By taking a measured approach and focusing on practical applications, organizations can unlock the immense potential of AI and build a more secure future for all.

Contact us if you’d like to dive deeper into specific use cases or explore how MixMode’s AI can enhance your security defenses.
